From Day 1 of our founding, we have been committed to the vision of "Intelligence with Everyone."

Today, we are officially open-sourcing and launching MiniMax M2, a model born for Agents and code. At roughly 8% of the price of Claude 4.5 Sonnet and nearly twice the speed, it's free to use for a limited time!

- Top-tier Coding Capabilities: Built for end-to-end development workflows, it excels in various applications such as Claude Code, Cursor, Cline, Kilo Code, and Droid.

- Powerful Agentic Performance: It demonstrates outstanding planning and stable execution of complex, long-chain tool-calling tasks, coordinating calls to the Shell, Browser, Python code interpreter, and various MCP tools.

- Ultimate Cost-Effectiveness & Speed: Through efficient design of activated parameters, we have achieved the optimal balance of intelligence, speed, and cost.

Our team has been building a variety of Agents to help tackle the challenges of our company's rapid growth. These Agents are starting to complete increasingly complex tasks, from analyzing online data and researching technical issues to daily programming, processing user feedback, and even screening resumes for HR. Working alongside our team, they are driving the company's development and helping build an AI-native organization that is evolving from developing AGI to advancing together with AGI. We are increasingly convinced that AGI is a productive force and that Agents are an excellent vehicle for it, marking an evolution from the simple Q&A of conversational assistants to the independent completion of complex tasks.

However, we found that no single model could fully meet our needs for these Agents. The challenge lies in finding a model that strikes the right balance between performance, price, and inference speed, an almost "impossible triangle." The best overseas models offer good performance but are very expensive and relatively slow. Domestic models are cheaper, but they lag in performance and speed. As a result, existing Agent products are often either very expensive or slow when they aim for good results. For instance, many Agent subscriptions cost tens or even hundreds of dollars per month, and completing a single task can often take hours.

We have been exploring whether it's possible to create a model that achieves a better balance of performance, price, and speed, thereby allowing more people to benefit from the intelligence boost of the Agent era and continuing our vision of "Intelligence with Everyone." This model needs a diverse range of capabilities, including programming, tool use, logical reasoning, and knowledge, all while having extremely fast inference speeds and very low deployment costs. To this end, we developed and have now open-sourced MiniMax M2.

Let's first look at the three most critical capabilities for an Agent: programming, tool use, and deep search. We compared M2 with several mainstream models:

As you can see, the model's abilities in tool use and deep search are very close to the best overseas models. While it is slightly behind the top overseas models in programming, it is already among the best in the domestic market.

There are several algorithmic and cognitive advancements behind this, which we will share in due course. But the core principle is simple: to create a model that meets our requirements, we must first be able to use it ourselves. To achieve this, our developers, including those in business and backend teams, worked alongside algorithm engineers, investing significant effort in building environments and evaluations, and are increasingly integrating it into their daily work.

Once we mastered these complex scenarios, we found that by transferring our accumulated methods to traditional large model tasks like knowledge and math, we could naturally achieve excellent results. For example, on the popular Artificial Analysis benchmark, which integrates 10 test tasks, our model ranked in the top five globally:

We have set the API price for the model at $0.30 (¥2.1) per million input tokens and $1.20 (¥8.4) per million output tokens, while providing an online inference service with a TPS (tokens per second) of around 100 (and rapidly improving). This price is about 8% of Claude 4.5 Sonnet's, with nearly double the inference speed.
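To make the pricing concrete, here is a minimal sketch that estimates the cost and generation time of a workload from the figures quoted above. The function names and the sample token counts are illustrative assumptions; the 100 TPS figure is the approximate value stated above, and real throughput will vary.

```python
# Prices quoted in this post, in USD per million tokens.
INPUT_USD_PER_MILLION = 0.30
OUTPUT_USD_PER_MILLION = 1.20
# Approximate throughput quoted in this post (tokens per second).
APPROX_TOKENS_PER_SECOND = 100


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the API cost in USD for a given number of tokens."""
    total = (input_tokens * INPUT_USD_PER_MILLION
             + output_tokens * OUTPUT_USD_PER_MILLION)
    return total / 1_000_000


def estimate_generation_seconds(output_tokens: int,
                                tokens_per_second: float = APPROX_TOKENS_PER_SECOND) -> float:
    """Estimate how long generating the output takes at a steady token rate."""
    return output_tokens / tokens_per_second


if __name__ == "__main__":
    # Hypothetical workload: 2M input tokens and 500K output tokens.
    cost = estimate_cost_usd(2_000_000, 500_000)
    seconds = estimate_generation_seconds(500_000)
    print(f"Estimated cost: ${cost:.2f}")
    print(f"Estimated generation time: {seconds:.0f} s")
```

Because output tokens cost four times as much as input tokens, output-heavy Agent tasks (long plans, generated code) dominate the bill even when the prompts are large.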

Over the past weekend, many enthusiastic developers at home and abroad have tested the model extensively with us. To make it easier for everyone to explore the model's capabilities, we are extending the free trial period until November 7th, 00:00 UTC. At the same time, we have open-sourced the complete model weights on Hugging Face. Interested developers can deploy the model themselves, with support already available in SGLang and vLLM.

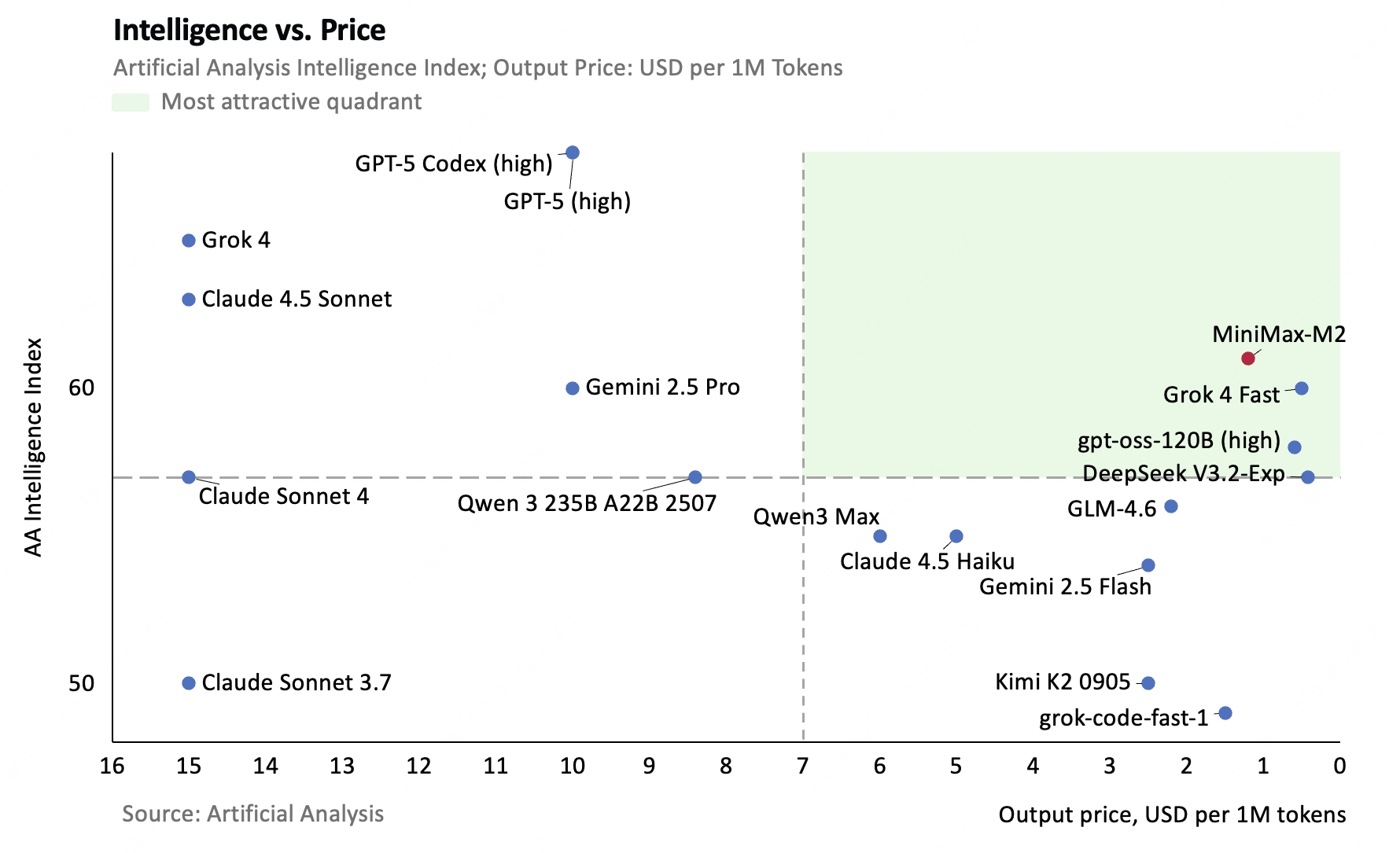

We believe this combination of price and inference speed is an excellent choice among the current mainstream models. Let's analyze this from two perspectives.

First, price versus performance. A suitable model should have good performance and be affordable, falling into the green area in the chart below. Here, we use the average score from the 10 test sets on Artificial Analysis to represent performance:

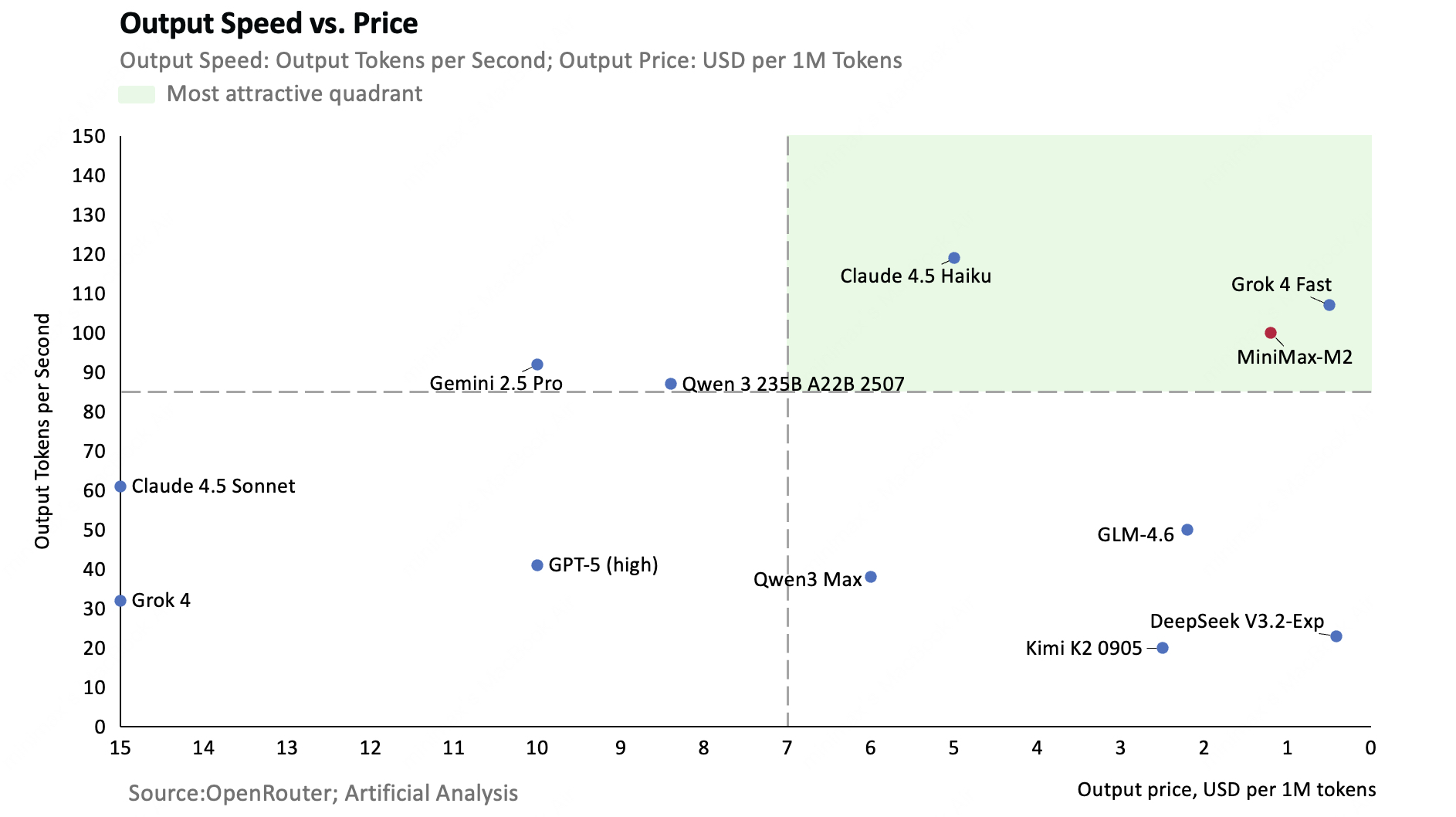

Next is price versus inference speed. When deploying a model, there is often a trade-off where a slower inference speed can lead to a lower price. An ideal model should be affordable and fast. We have compared several representative models:

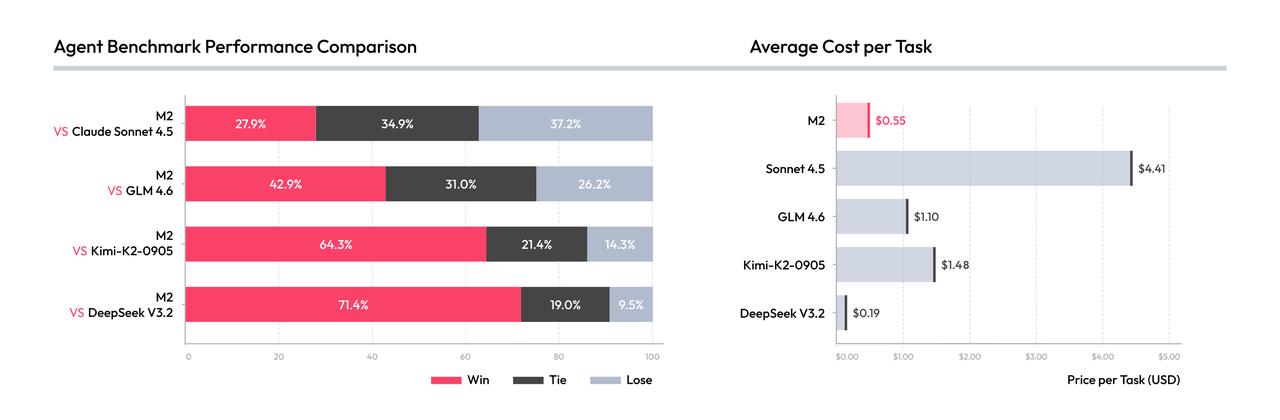

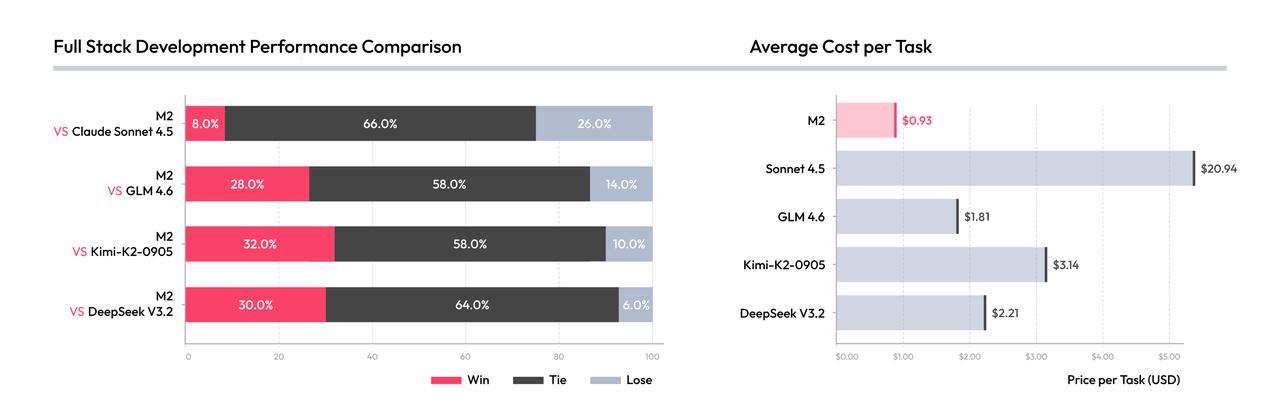

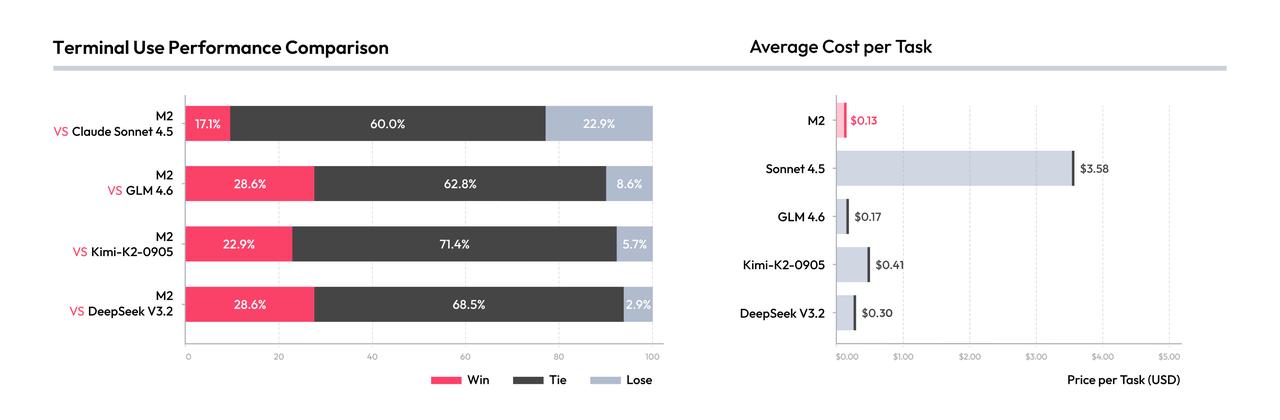

In addition to this analysis of standard data, we also conducted 1-on-1 comparisons of practical performance against Claude 4.5 Sonnet and several open-source models:

These test sets will be released on GitHub this week.

To make it easier for everyone to use Agent-related capabilities, we have launched our M2-powered Agent product in China and upgraded the overseas version. In MiniMax Agent, we offer two modes:

- Lightning Mode: A high-efficiency, high-speed Agent for instant output in scenarios like conversational Q&A, lightweight search, and simple coding tasks. It enhances the experience of dialogue-based products with powerful agentic capabilities.

- Pro Mode: Delivers professional agent capabilities with optimal performance on complex, long-running tasks. It excels at in-depth research, full-stack development, creating PPTs/reports, web development, and more.

Benefiting from M2's inherent inference speed, the M2-powered Agent is not only cost-effective but also completes complex tasks with significantly greater fluency.

We are currently offering MiniMax Agent for free, until our servers can't keep up.

How to Use

- The general-purpose Agent product, MiniMax Agent, powered by MiniMax-M2, is now fully open for use and free for a limited time:

https://agent.minimax.io/

- The MiniMax-M2 API is available on the MiniMax Open Platform and is free for a limited time:

https://platform.minimax.io/docs/api-reference/text-anthropic-api

- The MiniMax-M2 model weights have been open-sourced and can be deployed locally.

Local Deployment Guide

Download the model weights from the following Hugging Face repository:

https://huggingface.co/MiniMaxAI/MiniMax-M2

We recommend using vLLM or SGLang to deploy MiniMax-M2.

- vLLM: Please refer to the vLLM Deployment Guide.

https://huggingface.co/MiniMaxAI/MiniMax-M2/blob/main/docs/vllm_deploy_guide.md

- SGLang: Please refer to the SGLang Deployment Guide.

https://huggingface.co/MiniMaxAI/MiniMax-M2/blob/main/docs/sglang_deploy_guide.md

We recommend the following inference parameters for the best performance:

temperature=1.0, top_p=0.95, top_k=20
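As a rough illustration, the recommended parameters could be attached to a chat request like this. This is a sketch under assumptions: it presumes you are serving the model behind an OpenAI-compatible chat completions endpoint (as vLLM and SGLang can provide), that the server accepts `top_k` as an extra sampling field (it is not part of the standard OpenAI schema), and that the served model name matches the Hugging Face repository id. The helper `build_chat_request` is hypothetical; check the deployment guides for the exact setup.

```python
import json

# Sampling parameters recommended in this post.
RECOMMENDED_SAMPLING = {"temperature": 1.0, "top_p": 0.95, "top_k": 20}


def build_chat_request(prompt: str,
                       model: str = "MiniMaxAI/MiniMax-M2",  # assumed served model name
                       max_tokens: int = 512) -> dict:
    """Build a chat completions request body with the recommended sampling settings."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        **RECOMMENDED_SAMPLING,
    }


if __name__ == "__main__":
    # Print the JSON body; POST it to your own server's chat completions route.
    body = build_chat_request("Write a function that reverses a linked list.")
    print(json.dumps(body, indent=2))
```

The sketch only builds the request body, so it runs without a server; sending it is left to whichever HTTP client you already use.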

Tool Calling Guide: Please refer to the Tool Calling Guide.

https://huggingface.co/MiniMaxAI/MiniMax-M2/blob/main/docs/tool_calling_guide.md

Intelligence with Everyone.