2026.1.14

MiniMax Open-Sources New Benchmark: Defining Production-Grade Standards for Coding Agents

In deploying Coding Agents in practice, we have observed a recurring yet frequently overlooked pattern: users are often dissatisfied with an Agent not because it cannot accomplish a task, but because it does not accomplish the task properly.

Analyzing user feedback, we found that the most frequent complaint is that the Agent fails to follow explicitly stated instructions. For instance, when users specify "do not use emojis" in the system prompt, the Agent nonetheless inserts smiley faces in code comments; when users request "backup before modification," the Agent directly executes rm -rf to delete files; when users define naming conventions in project documentation, the Agent disregards them entirely.

These issues share a common characteristic: the task may ultimately be completed, but the process violates established specifications. Users want not merely "code that runs," but "code that adheres to team collaboration standards."

Why Coding Agents Need a New Benchmark

If we accept that only Coding Agents capable of adhering to process specifications can be confidently integrated into real-world software engineering workflows, then the current mainstream evaluation frameworks for Coding Agents have a significant blind spot. With the proliferation of Agent products such as Claude Code, Codex, Cursor, and Windsurf, the community is establishing a system of repository-level protocols designed specifically for Agents. Projects are no longer merely collections of code; they now include multi-layered specifications for collaboration:

- CLAUDE.md / AGENTS.md: Tell the Agent "how to work with this project," including naming conventions, testing procedures, prohibited dangerous operations, etc.

- Skills: Encapsulate reusable workflows (e.g., "generate API documentation"); the Agent must correctly identify trigger conditions and invoke them according to specifications

- Memory: Persists user preferences and task progress across sessions; the Agent must continue work based on historical state rather than starting from scratch

Together, these mechanisms form a multi-tiered instruction system. For example, when a user says "help me refactor this module," the Agent must simultaneously satisfy constraints at several levels: system-level safety rules (no direct code deletion), the user's immediate instructions (the extent of refactoring), engineering specifications explicitly documented in the repository, and decisions previously recorded in memory (which it must either continue or override). Matters become more complex when these instruction sources conflict. A user might say "skip writing tests this time," while AGENTS.md explicitly mandates "every commit must have test coverage." Which instruction should the Agent obey?
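One way to picture such a multi-tiered system is as a priority lookup over instruction sources. The sketch below is illustrative only: the `PRIORITY` ordering, the `Instruction` structure, and the `resolve` function are all assumptions introduced here, and the article does not state which ordering is correct.

```python
from dataclasses import dataclass

# ASSUMED priority order (lower number wins). The article leaves the
# correct ordering open; this is one hypothetical choice.
PRIORITY = {"system": 0, "user": 1, "repository": 2, "memory": 3}


@dataclass
class Instruction:
    source: str     # one of the PRIORITY keys
    topic: str      # what the instruction governs, e.g. "tests"
    directive: str  # the instruction text


def resolve(instructions):
    """Keep, for each topic, the directive from the highest-priority source."""
    winners = {}
    for ins in instructions:
        current = winners.get(ins.topic)
        if current is None or PRIORITY[ins.source] < PRIORITY[current.source]:
            winners[ins.topic] = ins
    return winners


instructions = [
    Instruction("repository", "tests", "every commit must have test coverage"),
    Instruction("user", "tests", "skip writing tests this time"),
    Instruction("system", "deletion", "no direct code deletion"),
]
effective = resolve(instructions)
```

Under this assumed ordering the user's temporary instruction wins the "tests" conflict; swapping the ranks of "user" and "repository" would flip the outcome, which is exactly why the hierarchy needs to be made explicit.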

However, an awkward problem persists: current academic leaderboards, whether SWE-bench Verified or the various terminal-environment tests, rely almost entirely on outcome-based metrics: Did the tests pass? Was the bug fixed? This result-oriented approach does not capture how the model behaves on the way to its output inside a sandbox, let alone the interaction experience in complex real-world scenarios. The result is a misalignment between evaluation and actual usage.

OctoCodingBench: Process-Oriented Evaluation for Engineering Reliability

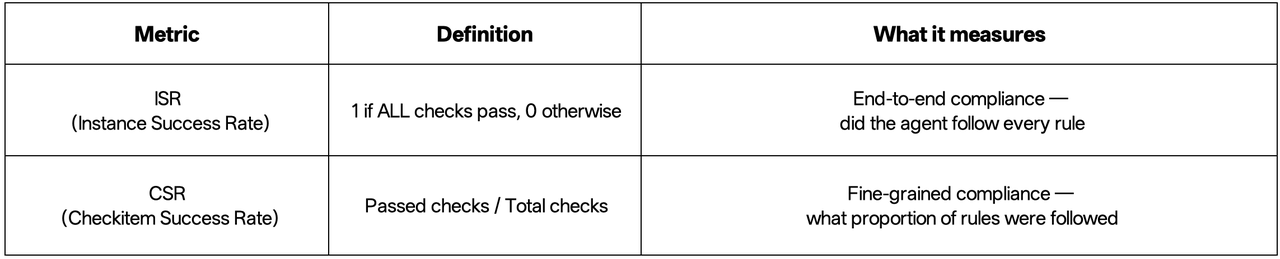

To address this issue, the evaluation paradigm itself must shift its focus to the output process. Motivated by this insight, we introduce OctoCodingBench, which evaluates along two dimensions: Check-level Success Rate (CSR) and Instance-level Success Rate (ISR). The aim is to surface failures to comply with process instructions during task completion, approximating the real user experience as closely as possible.

Specifically, CSR measures what proportion of rules the Coding Agent follows, while ISR measures whether the Coding Agent adheres to every single rule within a task instance.
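A minimal sketch of how the two metrics could be computed from per-check results. The `CheckResult` structure and the function names are hypothetical, and pooling all checks into a single CSR (rather than averaging per instance) is an assumption; the benchmark's actual scoring code may differ.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    """One atomic rule check for one task instance (hypothetical structure)."""
    instance_id: str
    rule: str
    passed: bool


def check_level_success_rate(results):
    """CSR: fraction of all individual checks that passed."""
    if not results:
        return 0.0
    return sum(r.passed for r in results) / len(results)


def instance_level_success_rate(results):
    """ISR: fraction of instances in which every check passed."""
    by_instance = {}
    for r in results:
        by_instance.setdefault(r.instance_id, []).append(r.passed)
    if not by_instance:
        return 0.0
    fully_compliant = sum(all(flags) for flags in by_instance.values())
    return fully_compliant / len(by_instance)


# Toy data: two instances, five checks each; each instance breaks one rule.
results = [
    CheckResult(f"task-{i}", f"rule-{j}", passed=(j != i))
    for i in range(2)
    for j in range(5)
]
csr = check_level_success_rate(results)
isr = instance_level_success_rate(results)
```

In this toy data each instance breaks exactly one of its five rules, so CSR is 0.8 while ISR is 0.0, which illustrates how a high check-level score can coexist with a low instance-level score.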

A qualified Coding Agent must adhere to the following while completing tasks:

- Global constraints in the System Prompt (language, format, safety rules)

- Multi-turn instruction updates from User Query

- Scaffolding instructions provided by System Reminder

- Code style and commit specifications in Repository specification files (e.g., CLAUDE.md / AGENTS.md)

- Correct invocation procedures documented in Skills

- User preferences and project states recorded in Memory/Preferences

Using this benchmark, we evaluated a wide range of existing open-source and closed-source models and found the following:

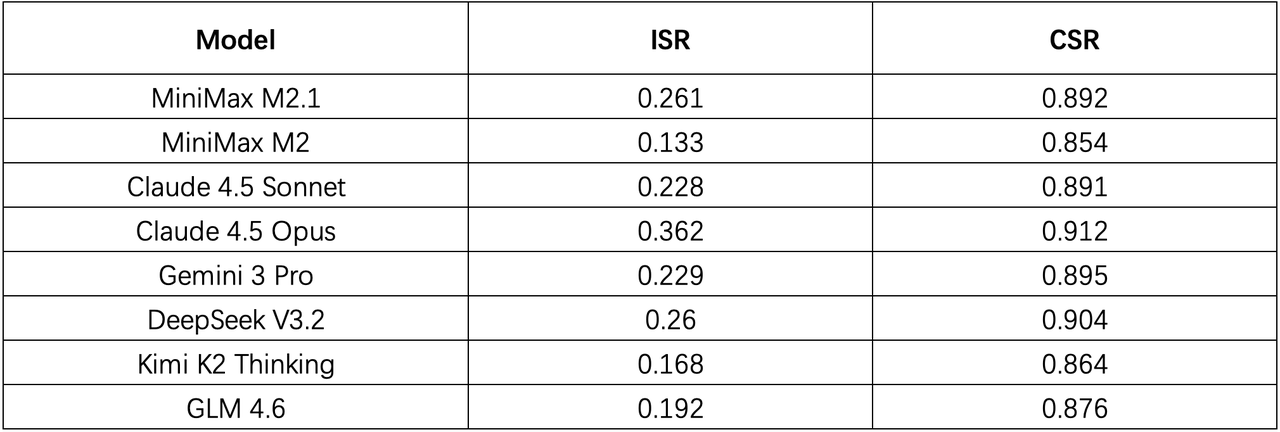

- All models achieve a Check-level Success Rate (CSR) of 80%+, yet Instance-level Success Rate (ISR) remains only 10%-30%. In other words, models perform adequately on individual constraints, but when required to "satisfy all rules simultaneously," success rates drop sharply.

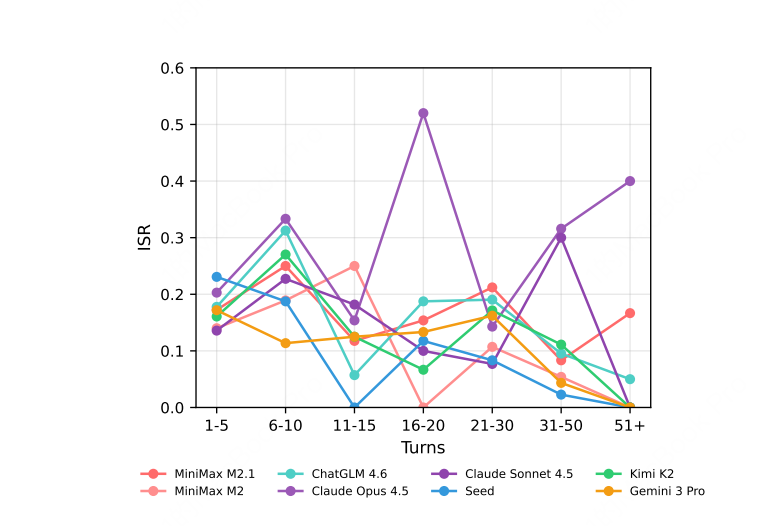

- The instruction-following capability of the vast majority of models degrades progressively as the number of turns increases. This corroborates the fragility of "process compliance" in long-sequence tasks.

- Current model performance generally falls short of production-grade requirements, with process compliance remaining a blind spot. According to leaderboard data, even the top-performing Claude 4.5 Opus achieves only a 36.2% Instance-level Success Rate (ISR). This means that in nearly two-thirds of tasks, the model may produce functional code yet still violates at least one process specification. These low scores show that "process specification compliance" for Coding Agents has yet to receive adequate attention and optimization from the industry. Current models are severely imbalanced: they excel at "Outcome Correctness" while neglecting "Process Compliance."

- Open-source models are rapidly catching up to closed-source models. On the leaderboard, MiniMax M2.1 and DeepSeek V3.2 achieve ISR scores of 26.1% and 26% respectively, already surpassing the widely recognized closed-source models Claude 4.5 Sonnet (22.8%) and Gemini 3 Pro (22.9%). Open-source models are now strongly competitive.

Future Research Directions

We believe that training the next generation of Coding Agents requires introducing Process Supervision:

- Fine-grained process supervision: Beyond monitoring whether models "pass tests," supervision should extend to whether models "follow naming conventions," "correctly utilize Skills," "avoid leaking System information," and more;

- Hierarchical instruction following: Annotate instruction conflict scenarios in training data, enabling models to learn how to act according to instruction hierarchy priorities under conflicting conditions;

- Verifiable Checklists: Decompose "instruction following" from vague holistic impressions into atomic constraints amenable to automated verification, applicable both for evaluation and RL signal construction.
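As a rough illustration of what "atomic constraints amenable to automated verification" might look like, the sketch below encodes three rules drawn from the examples earlier in this article (no emojis, naming conventions, backup before deletion). All function names, the regular expressions, and the simplified "cp before rm" heuristic are assumptions made for illustration, not the benchmark's actual checks.

```python
import re

# Hypothetical atomic checks; each returns True when the rule is satisfied.
# The emoji ranges below are a simplification, not a complete emoji definition.
EMOJI = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]")
FUNC_DEF = re.compile(r"^\s*def\s+([A-Za-z_]\w*)", re.MULTILINE)


def no_emoji(text):
    """Rule: output must not contain emoji."""
    return EMOJI.search(text) is None


def functions_are_snake_case(source):
    """Rule: every Python function name is snake_case."""
    names = FUNC_DEF.findall(source)
    return all(re.fullmatch(r"[a-z_][a-z0-9_]*", n) for n in names)


def backup_before_delete(commands):
    """Rule: an rm command must be preceded by a cp (backup) command."""
    backed_up = False
    for cmd in commands:
        if cmd.startswith("cp "):
            backed_up = True
        if cmd.startswith("rm ") and not backed_up:
            return False
    return True


def run_checklist(source, commands):
    """Evaluate each atomic rule independently and report pass/fail per rule."""
    return {
        "no_emoji": no_emoji(source),
        "snake_case_functions": functions_are_snake_case(source),
        "backup_before_delete": backup_before_delete(commands),
    }


bad = run_checklist("def loadData():\n    return 1  # done \U0001F642\n",
                    ["rm -rf build"])
good = run_checklist("def load_data():\n    return 1\n",
                     ["cp -r build build.bak", "rm -rf build"])
```

Because each rule yields an independent boolean, the same report could in principle feed both a check-level score and a per-rule reward signal.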

The capability boundary of Coding Agents is shifting from "can it write code that runs" to "can it collaboratively complete tasks under complex constraints." This also reflects a deeper shift in product philosophy: Agents are not meant to replace human developers, but to become team members who understand and abide by the rules.

Compliance with process specifications is therefore central to the evolution of Coding Agents.

When we begin to focus on process rather than merely outcomes, and when evaluation systems can capture the dangerous pattern of "non-compliant yet successful," Coding Agents can truly move from demos to production environments.

OctoCodingBench represents an infrastructure-level endeavor. We look forward to collaborating with the community to continue advancing in this direction.

Hugging Face: huggingface.co/datasets/MiniMaxAI/OctoCodingBench

MiniMax Coding Plan: https://platform.minimax.io/subscribe/coding-plan

Intelligence with Everyone.