MiniMax M2.5

SOTA in coding and agentic tasks, designed for the Agent Universe

Designed for high-throughput, low-latency production environments. M2.5 delivers industry-leading coding and reasoning capabilities at a fraction of the cost.

Research

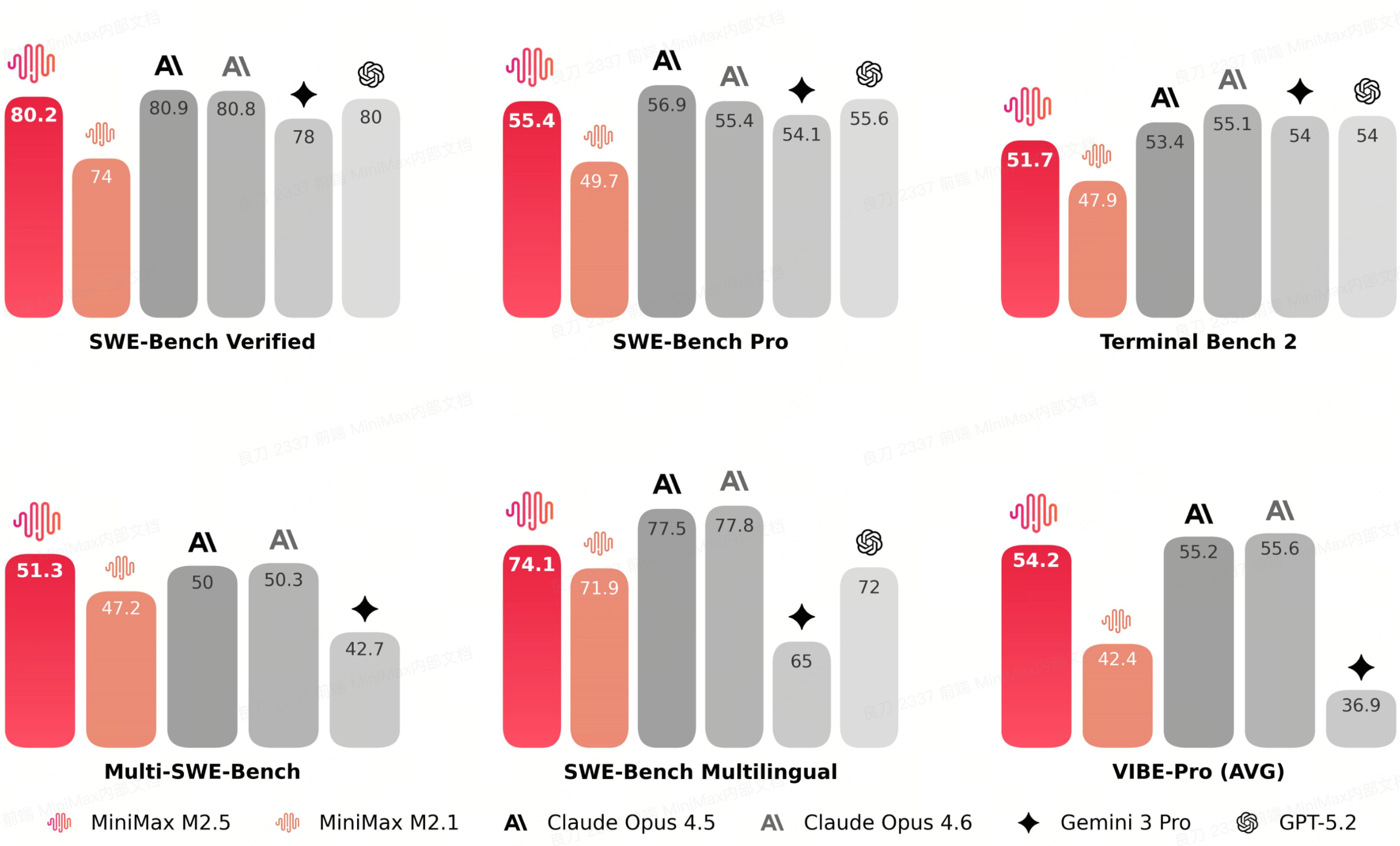

Performance Benchmark

Compared to its predecessor, M2.5 shows more mature decision-making on agentic tasks: it has learned to solve problems with more precise search iterations and better token efficiency.

M2.5 has achieved significant capability improvements in advanced workspace scenarios such as Word, PPT, Excel financial modeling, and more.

By combining reinforcement learning-optimized task decomposition with thinking token efficiency, M2.5 delivers significant advantages in both speed and cost when completing complex tasks.

M2.5 is available in 100 TPS and 50 TPS versions, with output pricing at just 1/10 to 1/20 of comparable models.
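To make these figures concrete, the sketch below converts a TPS rating into generation time and applies the 1/10 and 1/20 price ratios to a baseline. It reads TPS as output tokens per second at a steady rate. The baseline price of 10.0 per million output tokens is a made-up placeholder, not a published price for any model.

```python
def generation_seconds(output_tokens: int, tps: float) -> float:
    """Seconds needed to emit `output_tokens` at a steady `tps` tokens/second."""
    return output_tokens / tps


def relative_price(baseline_price: float, ratio: float) -> float:
    """Price obtained by applying a ratio (e.g. 1/10 or 1/20) to a baseline."""
    return baseline_price * ratio


tokens = 10_000
for tps in (100, 50):
    print(f"{tps} TPS: {generation_seconds(tokens, tps):.0f} s for {tokens} tokens")

# Hypothetical baseline: 10.0 currency units per million output tokens.
baseline = 10.0
print("1/10 of baseline:", relative_price(baseline, 1 / 10))
print("1/20 of baseline:", relative_price(baseline, 1 / 20))
```

Under these assumptions, a 10,000-token response takes about 100 seconds on the 100 TPS version and about 200 seconds on the 50 TPS version.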

Core Coding Benchmark Scores: Open-Source SOTA

M2.5 has reached the level of tier-one industry models. On the multilingual task benchmark Multi-SWE-Bench, M2.5 achieved the best performance in the industry.

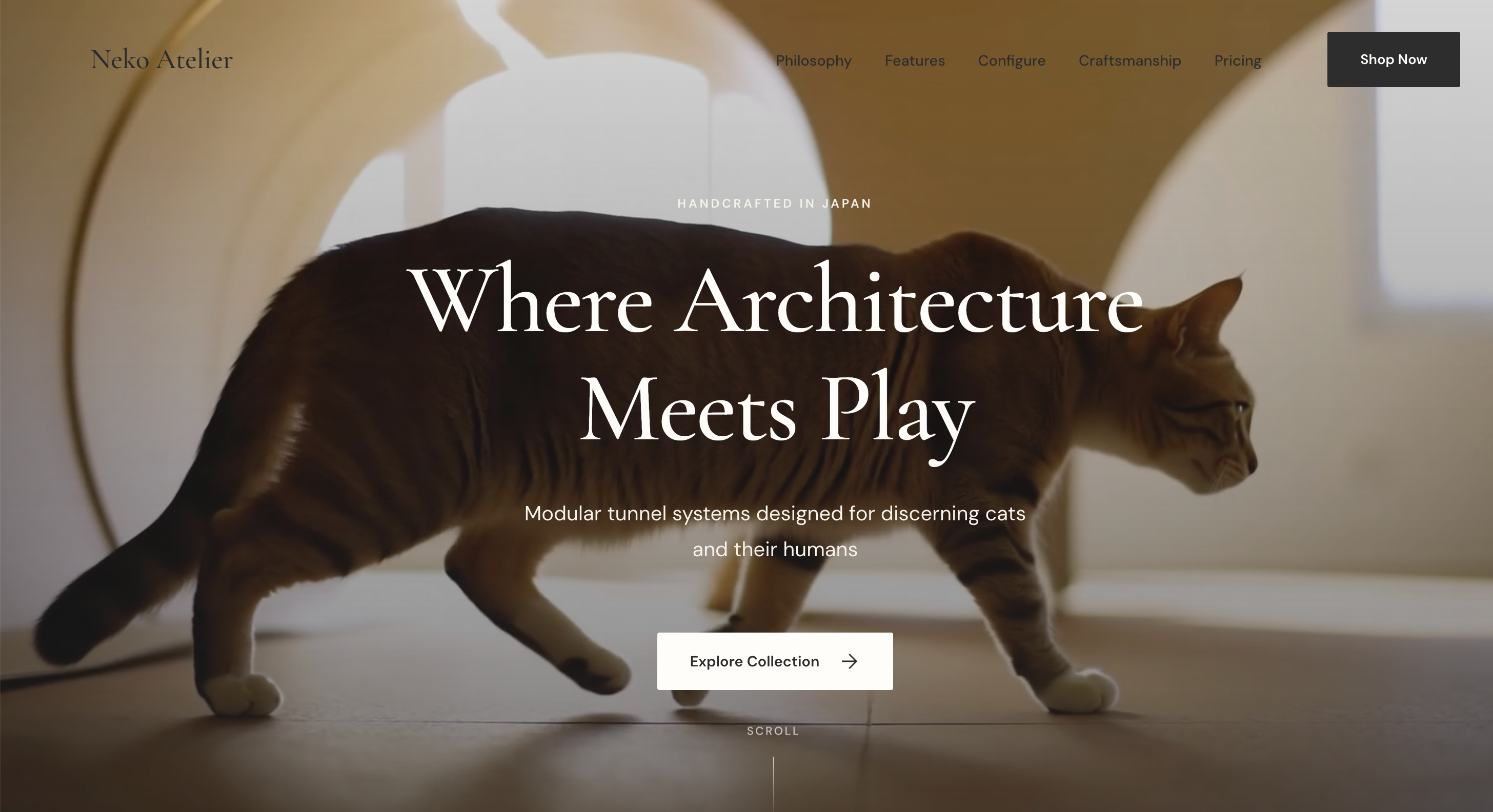

M2.5 Showcases

See What M2.5 Can Do

All examples below were generated by MiniMax M2.5 in a single shot.

Invite friends, earn benefits

Friends who subscribe to a Coding Plan get a 10% discount, and the inviter gets a 10% rebate!

01 / Access Method

Quick API Integration

Two API versions are available: M2.5 and M2.5-lightning, which produces identical results at higher speed. Caching is fully automatic and requires no configuration.
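As a rough illustration of switching between the two versions, the sketch below builds (but does not send) a chat request using only the Python standard library. Several details are assumptions and not taken from this page: the OpenAI-style chat-completions schema, the placeholder base URL, and the exact model identifier strings. Check the official API documentation for the real values.

```python
import json
import urllib.request

# Assumptions (not from the source page): an OpenAI-style chat schema,
# a placeholder base URL, and these exact model identifier strings.
BASE_URL = "https://api.example.com/v1"  # hypothetical placeholder
MODELS = {
    "standard": "MiniMax-M2.5",             # assumed identifier
    "lightning": "MiniMax-M2.5-lightning",  # assumed identifier
}


def build_chat_request(prompt: str, variant: str = "standard",
                       api_key: str = "YOUR_API_KEY") -> urllib.request.Request:
    """Build a POST request for one user message; the caller decides when to send it."""
    payload = {
        "model": MODELS[variant],
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


req = build_chat_request("Write a binary search in Go.", variant="lightning")
print(req.get_method(), req.full_url)
print(json.loads(req.data)["model"])
```

Only the `model` field changes between the two versions. To actually send the request, pass `req` to `urllib.request.urlopen` once the real endpoint and key are filled in.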

02 / Access Method

For AI Coding Tools

Model weights have been fully open-sourced on HuggingFace. It is recommended to use vLLM or SGLang for deployment to achieve optimal performance.

Subscribe to the Coding Plan

The price remains unchanged, while performance has significantly improved. Coding Plan users now automatically benefit from higher inference speeds.

Open Platform Integration

Supports both standard M2.5 and the high-TPS version, M2.5-lightning. Coding Plan users will automatically receive a larger share of lightning resource allocation.

MiniMax Agent Integration

The general Agent platform based on M2.5 is now fully open. Experience the best programming assistance and logical reasoning capabilities without any development required.

Open Source and Local Deployment

We are committed to giving back to the community. M2.5 has been open-sourced simultaneously on HuggingFace and GitHub, and supports private cluster deployment and fine-tuning.

03 / Access Method

Local Private Deployment

Model weights are fully open-sourced on HuggingFace. We recommend SGLang or vLLM for optimal performance; Transformers and KTransformers are also supported.
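For a vLLM deployment, a launch command might be assembled as in the sketch below. The HuggingFace repository id, the GPU count, and the port are assumptions for illustration only; take the real repository id and hardware requirements from the model card. `--trust-remote-code` is included on the assumption that the model ships custom code, and can be dropped if it does not.

```python
import shlex

# Assumed repository id; confirm it on the HuggingFace model page.
MODEL_ID = "MiniMaxAI/MiniMax-M2.5"


def vllm_serve_command(model_id: str = MODEL_ID, gpus: int = 8,
                       port: int = 8000) -> list[str]:
    """Return the argv for launching vLLM's OpenAI-compatible server."""
    return [
        "vllm", "serve", model_id,
        "--tensor-parallel-size", str(gpus),  # shard the model across `gpus` GPUs
        "--port", str(port),
        "--trust-remote-code",                # assumption: model needs custom code
    ]


# Print a shell-ready command line; run it with subprocess.run(...) if desired.
print(shlex.join(vllm_serve_command()))
```

Returning an argv list rather than a single string keeps the command safe to pass directly to `subprocess.run` without shell quoting issues.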