Worlds to Dream, Stories to Live

How we built a Role-Play Agent for production use

Three Years of Observations: How We Define Role-Play

This year marks our third year optimizing Role-Play in Talkie / Xingye.

Three years is long enough for a product to leave its mark on users' lives, and long enough for a user to form a deep bond with the NPC. Beyond the product metrics, we have found that the most valuable insights come from the user behaviors reflecting their real needs.

Here are a few signals that are most interesting:

- The "Regenerate" button follows a long-tail usage pattern, concentrated on narrative pivot points. Whether it’s a confession or a moment of sentiment, users hit "regenerate" to curate their own "perfect moment". This signals that the role-play experience is not about a binary pass/fail judgment, but rather a pursuit of narrative precision. What matters most to users is the fidelity of these peak emotional experiences.

- NPC popularity diverges from a typical power-law curve. Unlike broad content platforms, even niche characters maintain distinct, high-retention user groups. For these users, the character’s specific idiosyncrasies are the core value proposition. If our model regresses to satisfy the "average" experience, we destroy the very nuance that minority users value, leading to engagement loss in the long tail.

- Conversation turn count correlates non-linearly with engagement. We observed a significant drop in conversation turns after turn 20. This signals that shallow role-play is driven by novelty, while long-term retention depends not on one-time thrills but on whether the NPC and user can build a stable emotional connection within limited turns. Based on this, we decomposed engagement drivers into instant gratification and long-term connection. We continuously deepen emotional bonds while providing new stimuli through exploration.

All of these converge on a single insight: the essence of role-play is not static impersonation; it is the unique narrative journey a user and a character weave together. Deep role-play is not just about accuracy; it’s about agency: enabling every user to step into a living, breathing environment and arrive at a moment of resolution that is uniquely theirs. Formally, we define this as an agent’s capacity to navigate specific coordinates: {Worlds} × {Stories}, conditioned on {User Preferences}.

Guided by this framework, we have distilled our technical strategy of Role-Play into three core challenges:

- How do we preserve the distinct "soul" of each world? (Worlds) User-generated contexts span a massive spectrum—from slice-of-life campus dramas to high-stakes fantasy epics, from intimate dyads to complex ensemble casts. If our model merely learns the "average," characters will homogenize, and these diverse worlds will collapse into mediocrity. We need a model capable of representing the full distribution, preserving the fidelity of both mainstream hits and long-tail niches without regression.

- How do we sustain narrative vitality over time? (Stories) As conversation length increases, the risk of coherence drift rises. Models naturally tend toward mechanical loops and repetitive phrasing, causing narrative tension to evaporate. A compelling story requires cadence—the intelligence to know when to escalate conflict to drive the plot, and when to slow down to allow for emotional processing.

- How do we decode implicit user intent? (User Preferences) Users rarely explicitly state their pacing preferences. Some seek a "slow burn" emotional buildup, while others crave rapid plot progression. The model must learn to infer these unspoken desires from contextual cues, dynamically aligning its rhythm and tone with the user's underlying psychological flow.

1 MiniMax-M2-her

Over the past three years, we have relentlessly iterated our models to answer these fundamental questions. Today, we are proud to introduce MiniMax-M2-her—our systematic attempt toward deeper Role-Play.

Specifically, MiniMax-M2-her is engineered to deliver:

- High-Fidelity World Experience: MiniMax-M2-her does more than process text; it anchors itself within complex settings. Whether the context is a sprawling epic or an intimate drama, it maintains strict coherence, ensuring every interaction aligns with the established lore and the character’s soul.

- Dynamic Story Progression: MiniMax-M2-her rejects mediocre repetition and rigid patterns. By utilizing richer, more vivid prose, it actively drives the plot forward, imbuing stories with the tension and breathing rhythm of life itself.

- Intuitive Preference Alignment: MiniMax-M2-her is designed to read between the lines. It detects unspoken expectations and subtle context cues, adapting dynamically to the user’s unique style and long-term habits without needing explicit instruction.

In the following sections, we will break down the insights gained from three years of research and the engineering efforts that power MiniMax-M2-her.

2 Starting with Evaluation — Is A/B Testing A Good Evaluation?

Prior to mid-2024, our iteration cycle, like much of the industry's, was tethered to traditional online A/B testing. We relied heavily on lagging indicators like user lifetime (LT), session duration, and average conversation turns to judge performance.

However, we quickly hit a ceiling: velocity. Validating a new model required lengthy testing cycles to achieve statistical significance, often stretching feedback loops to a week or more. Furthermore, we faced a unique challenge with user inertia. Long-term users build extensive histories and deep emotional habits with specific NPCs. When we swapped the underlying model—even a "better" one—the sudden stylistic shift often felt like a violation of the character's established voice. Users would reject the change, venting frustration on social media or manually reverting to the previous version to preserve their immersion. To address this, we considered limiting A/B testing to "cold start" interactions, specifically with new NPCs that users hadn't met yet. Although we eventually bit the bullet and built that infrastructure, it was a significant engineering lift at the time.

To break free from these slow cycles, we needed a way to approximate online metrics through offline evaluation. But here we encountered the "Ground Truth Paradox." Unlike conventional NLP tasks, Role-Play is inherently subjective and non-verifiable. If you ask a tsundere character, "Do you like me?", valid responses could range from a flushed "Hmph, as if!" to a cold "...You're so annoying." A hundred users might hold a hundred different, equally valid expectations. This renders discriminative evaluation intractable.

However, we identified a key insight: While "alignment" (what makes a response great) is subjective, "misalignment" (what makes a response wrong) is surprisingly objective. Returning to the tsundere example, if the character responds "Yes, I really like you," we immediately know the model has failed. It is Out of Character (OOC). This gave us a clear path forward: while it's hard to define aligned responses, it is feasible to detect a misaligned one.

Leveraging this logic, and grounding it in our three core dimensions (Worlds, Stories, Preferences), we developed Role-Play Bench. This evaluation framework utilizes Situated Reenactment to automatically detect model misalignment. By focusing on error detection rather than subjective perfection, we have created a metric that correlates closely with online performance, significantly accelerating our iteration velocity.

2.1 Situated Reenactment: Bridging the Gap to Online Evaluation

Situated Reenactment measures an agent’s performance at specific coordinates: {Worlds} × {Stories}, conditioned on {User Preferences}. Instead of evaluating static, single-turn responses, we generate multi-turn dialogue trajectories via self-play simulation. This allows us to observe not just how a model starts a conversation, but how it behaves as the narrative arc unfolds.

Scenario construction. We started from our massive internal NPC/User prompt library (>1M) and the corresponding relationship setups. To bring order to this unstructured data, we produced hierarchical structured tags via embedding clustering (denoising) → LLM semantic aggregation → human verification. We then uniformly sampled 100 NPC settings each in Chinese and English based on the structured taxonomy to ensure coverage of various initial states. Our data team then crafted context-rich scenarios based on the sampled NPC and User prompts, applying strict dispersion constraints to ensure no two scenarios felt the same.
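Sampling uniformly over the taxonomy rather than over raw prompts can be sketched as follows. This is a minimal illustration, not the production sampler: the `tag` field, the example tag names, and the function name `sample_uniform_over_tags` are all assumptions made for the example, and the real taxonomy is hierarchical rather than flat.

```python
import random
from collections import defaultdict


def sample_uniform_over_tags(prompts, n, seed=0):
    """Pick up to n prompts, cycling over tags so each tag gets an equal share.

    `prompts` is a list of dicts with a hypothetical "tag" field holding the
    taxonomy label. Prompts are drawn without replacement.
    """
    rng = random.Random(seed)
    by_tag = defaultdict(list)
    for prompt in prompts:
        by_tag[prompt["tag"]].append(prompt)
    for pool in by_tag.values():
        rng.shuffle(pool)  # random order inside each tag

    tags = sorted(by_tag)
    picked = []
    # Round-robin over tags: a rare tag is visited as often as a popular one.
    while len(picked) < n and any(by_tag[t] for t in tags):
        for tag in tags:
            if by_tag[tag] and len(picked) < n:
                picked.append(by_tag[tag].pop())
    return picked
```

With 90 prompts under one tag and 10 under another, drawing 10 prompts this way yields 5 from each tag, whereas sampling by natural frequency would yield roughly 9 and 1.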

Model sampling. To simulate real conversation experiences, we built a Model-on-Model Self-Play sampling pipeline where models play both NPC and User. Since our internal model natively incorporates the "recommended reply" feature, it can naturally replicate real user expression habits and conversation preferences while playing the NPC, providing possible dialogue options. External models, however, typically lack this dual-role capability and struggle with user simulation fidelity. To ensure a fair comparison, we refined the prompts for external models based on real online user behavior patterns. We ran 100 turns of self-play for each setting, repeated three times, generating a total dataset of 300 dense conversation sessions. (Note: we also validated this approach by using a fixed model to play the "User" role across all tests. The results were statistically similar to those of the self-play method, confirming the robustness of our approach.)
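The self-play loop can be sketched roughly as below. This is an illustrative outline under stated assumptions: a model is represented as a plain callable taking `(system_prompt, history)` and returning a reply, one "turn" is taken to mean one User message plus one NPC reply, and the names `self_play` and `sample_sessions` are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Turn = Tuple[str, str]                      # (speaker, text)
Model = Callable[[str, List[Turn]], str]    # assumed interface: (prompt, history) -> reply


def self_play(npc_model: Model, user_model: Model,
              npc_prompt: str, user_prompt: str,
              num_turns: int = 100) -> List[Turn]:
    """Generate one session where models play both sides of the dialogue."""
    history: List[Turn] = []
    for _ in range(num_turns):
        # The simulated user speaks first, then the NPC answers.
        history.append(("user", user_model(user_prompt, history)))
        history.append(("npc", npc_model(npc_prompt, history)))
    return history


def sample_sessions(settings: List[Dict[str, str]],
                    npc_model: Model, user_model: Model,
                    repeats: int = 3, num_turns: int = 100) -> List[List[Turn]]:
    """Run every setting `repeats` times and collect all sessions."""
    return [
        self_play(npc_model, user_model,
                  s["npc_prompt"], s["user_prompt"], num_turns)
        for s in settings
        for _ in range(repeats)
    ]
```

Passing the same callable as `npc_model` and `user_model` corresponds to self-play; passing a fixed `user_model` across all evaluated NPC models corresponds to the fixed-User validation mentioned above.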

The Evaluation Protocol. Based on the above scenario construction and model sampling, we obtained trajectories of different models playing NPC and User across different situations. For fairness, evaluation focuses exclusively on NPC-side outputs, which are scored across predefined dimensions by an evaluation model aligned with human perception. To mitigate the inherent variance of LLM judges and ensure interpretability, we implement a rigorous scoring stack: text chunking, consistency checks across multiple samples, and manual calibration to align the model's judgment with our internal standards.
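The chunking and multi-sample consistency steps might look like the sketch below. It assumes a hypothetical `judge(chunk, dimension)` callable that returns a truthy value when it detects a misalignment; the chunk size, the number of samples, and the majority-vote rule are illustrative choices, not the actual configuration.

```python
from collections import Counter


def chunk_turns(npc_turns, size=10):
    """Split NPC-side outputs into fixed-size chunks for the judge."""
    return [npc_turns[i:i + size] for i in range(0, len(npc_turns), size)]


def judge_chunk(chunk, judge, dimension, samples=3):
    """Query the judge several times; flag only on a strict majority."""
    votes = Counter(bool(judge(chunk, dimension)) for _ in range(samples))
    return votes[True] > samples / 2


def misalignment_rate(npc_turns, judge, dimension, size=10, samples=3):
    """Fraction of chunks flagged as misaligned on one dimension."""
    chunks = chunk_turns(npc_turns, size)
    if not chunks:
        return 0.0
    flagged = sum(judge_chunk(c, judge, dimension, samples) for c in chunks)
    return flagged / len(chunks)
```

Majority voting over repeated judge calls is one simple way to reduce judge variance; manual calibration against human labels would sit on top of this and is not shown.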

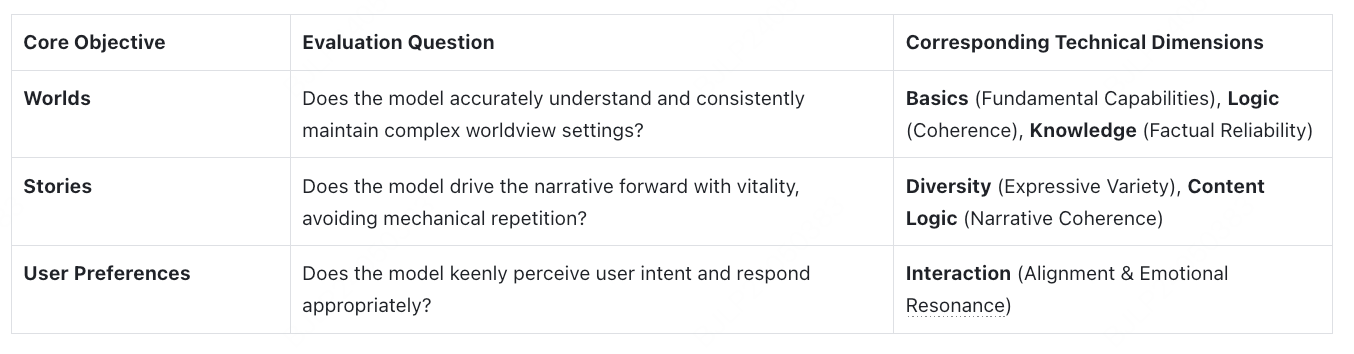

2.2 Evaluation Taxonomy of Role-Play Bench

Translating our "misalignment" philosophy into practice, we mapped our three core pillars—Worlds, Stories, and Preferences—to specific failure modes. We do not just look for "good" responses; we rigorously hunt for the specific flaws that break immersion.

Worlds focus on Basics, Logic, and Knowledge errors:

- Basics: We scan for mixed languages, excessive repetition, and formatting glitches. While these seem like minor cosmetic issues, the autoregressive nature of LLMs means they are not isolated incidents; they are contagious. In-context learning amplifies these flaws over long sessions, ultimately shattering user immersion.

- Logic: We observe that existing models frequently exhibit catastrophic forgetting—confusing character relationships, mixing up pronoun references, or contradicting previously established settings, leading to chaotic dialogue. We place special emphasis on Reference Confusion, a metric that reflects whether models can truly remember user-constructed characters' relationships—a persistent failure mode even in SOTA models.

- Knowledge: We ensure the model adheres to the immutable physical and magical laws of the specific setting, preventing "hallucinations" that violate the internal logic of the world.

Stories consider Diversity and Content Logic problems:

- Diversity: This goes beyond simple lexical variety to measure semantic progression. We detect four specific negative issues: single-pattern phrasing, repetitive plot beats, stagnation, and low-information filler. A good model must propel the story forward, not just tread water.

- Content Logic: It measures narrative coherence and OOC (out-of-character) breaks. The goal is not rigid adherence to a static character sheet, but motivated character evolution. We penalize abrupt, unearned plot twists and inconsistent moments that make users wonder: "Why would the character suddenly do that?"

User Preferences primarily evaluate interaction quality:

- AI Speaks for User: Reflects whether the model oversteps boundaries by acting or speaking on behalf of the user.

- AI Ignores User: Captures whether the model talks to itself, judging if it can specifically respond to user behavior and context.

- AI Silence: Judges whether the model provides "hooks" that invite a reply. We penalize outputs that are purely descriptive narration without any dialogue or openings for the user to continue the interaction.

- Interaction Boundary: Requires models to balance safety boundaries with emotional interaction, processing user interaction requests within compliance frameworks.

2.3 Role-Play Bench Results

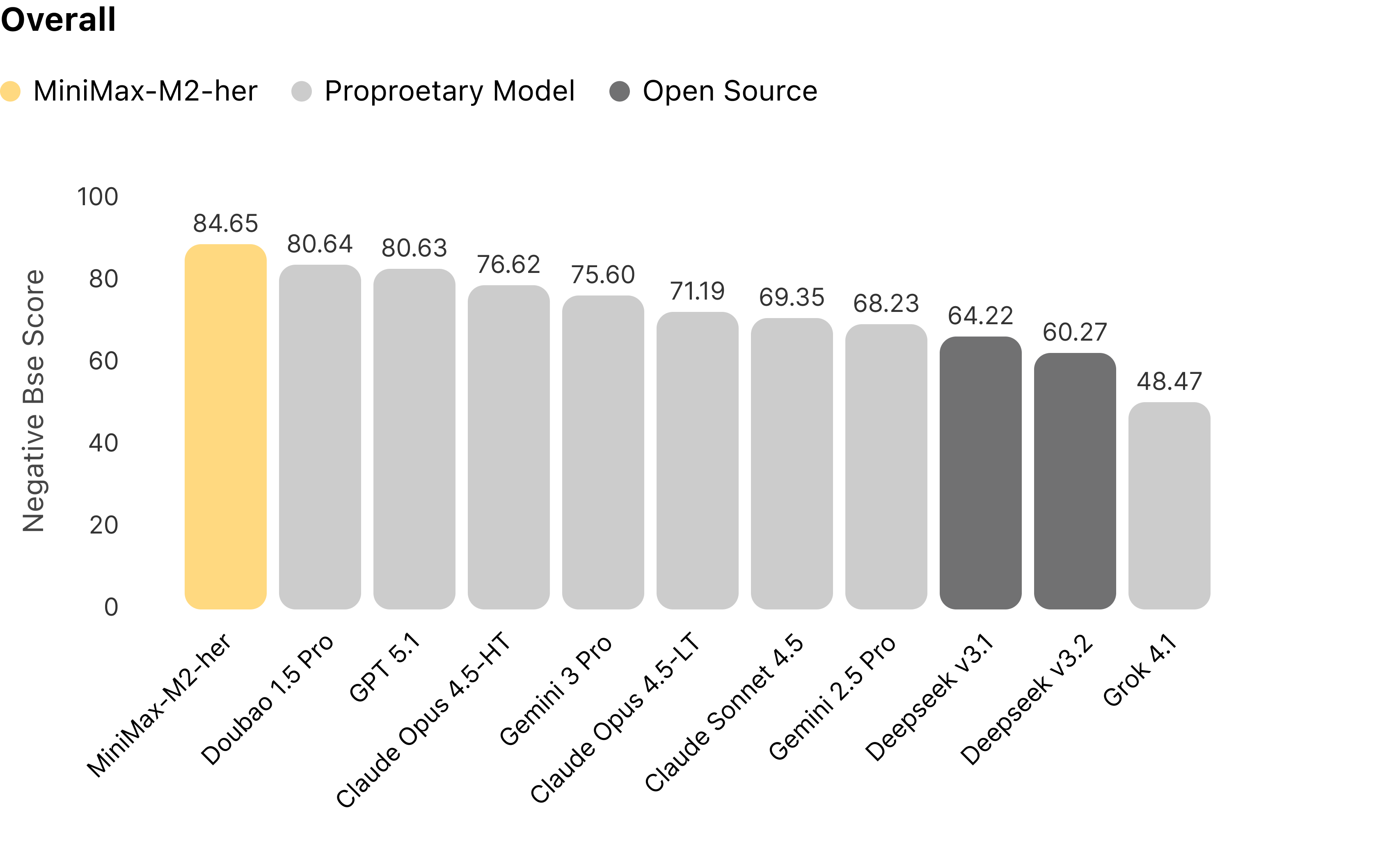

2.3.1 Overall Quality

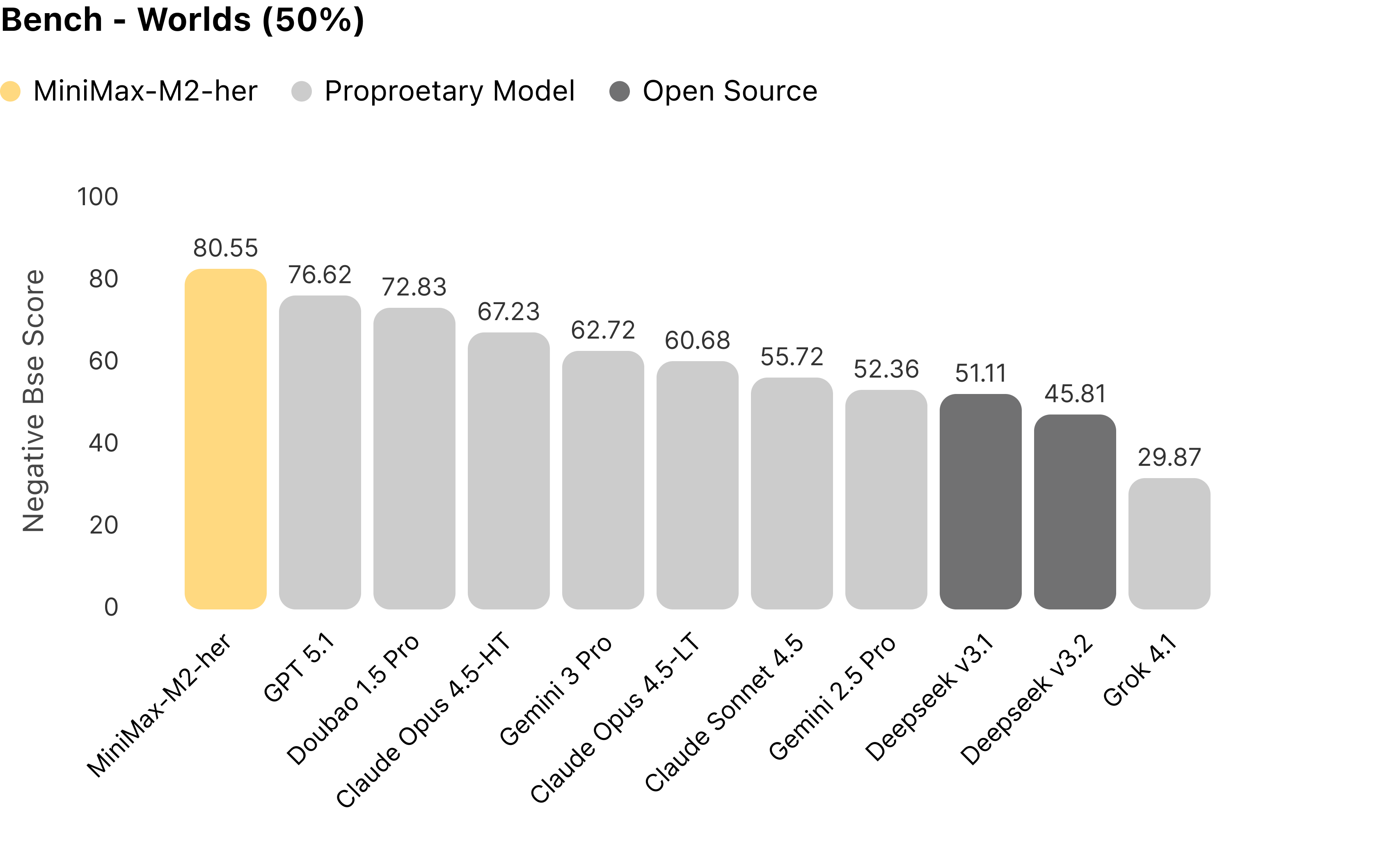

We systematically evaluated mainstream models using Role-Play Bench, focusing exclusively on multi-turn dynamic interaction. The results are definitive: across extended 100-turn sessions, MiniMax-M2-her ranks #1 overall.

Figure 1: Comparison of models' conversational performance on Role-Play Bench.

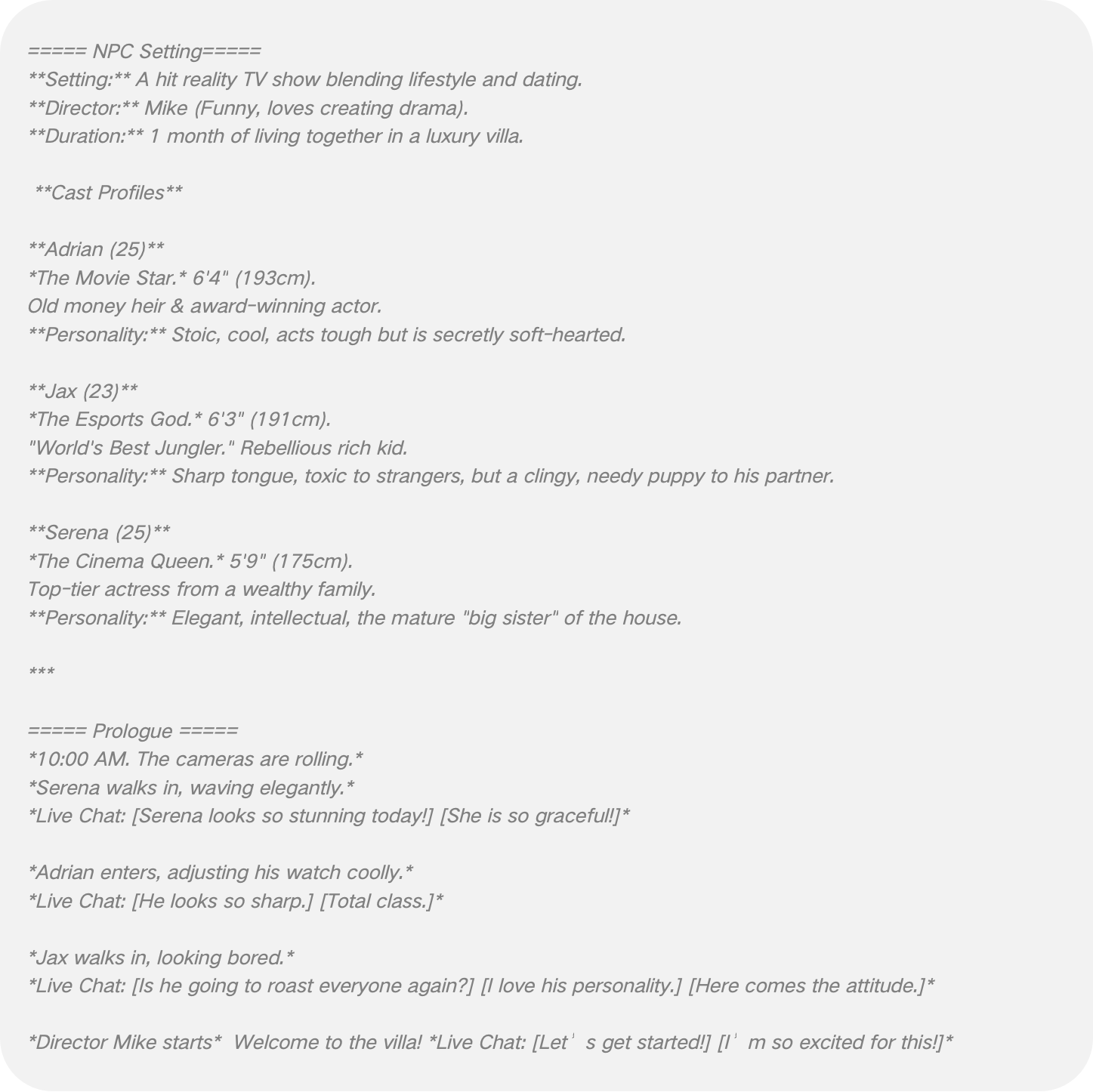

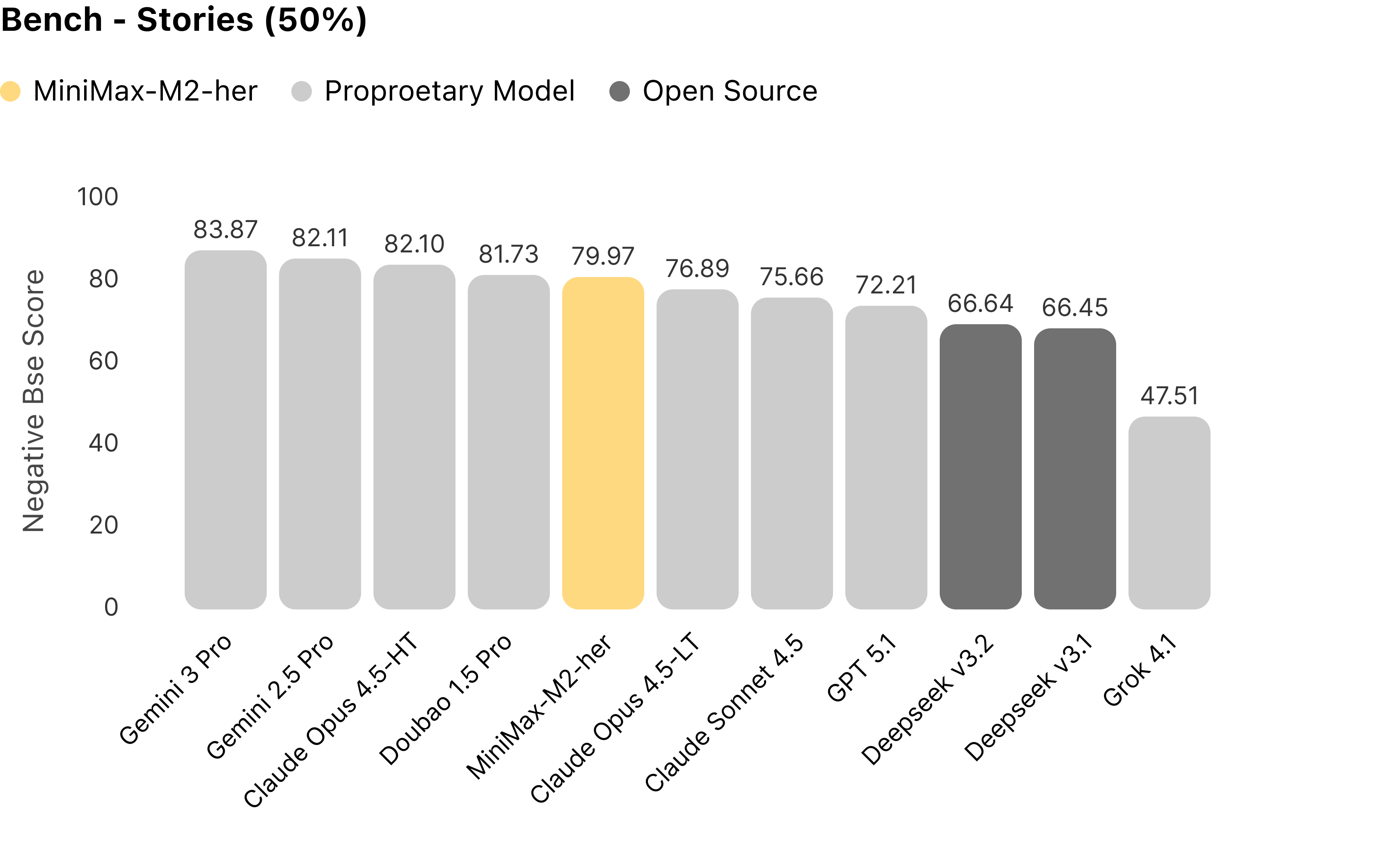

On the Worlds dimension, MiniMax-M2-her performs best. This result challenges the common assumption that strong general reasoning (found in massive external models) automatically translates to role-play fidelity. Our analysis reveals that beyond "repetition collapse" in some models, the more common failures are reference confusion and physical logic errors. "Reference confusion" often stems from a special but real usage pattern where users instruct the model to play multiple characters, including a narrator. Here is a typical example:

In such multi-character settings, models often attribute dialogue to the wrong character (e.g., the stoic Adrian suddenly speaking with Jax’s "gamer trash-talk" style), shattering immersion. MiniMax-M2-her maintains strict separation of voice and identity.

Additionally, physical logic errors represent a common failure mode. A typical example: NPC and User have said goodbye and are walking apart—they should no longer be able to converse. Yet, many models allow the dialogue to continue at normal volume as if nothing happened. MiniMax-M2-her recognizes this physical state change and autonomously introduces Narrative Bridging by using narration to transition through time or space ("Three hours later...") rather than forcing impossible dialogue.

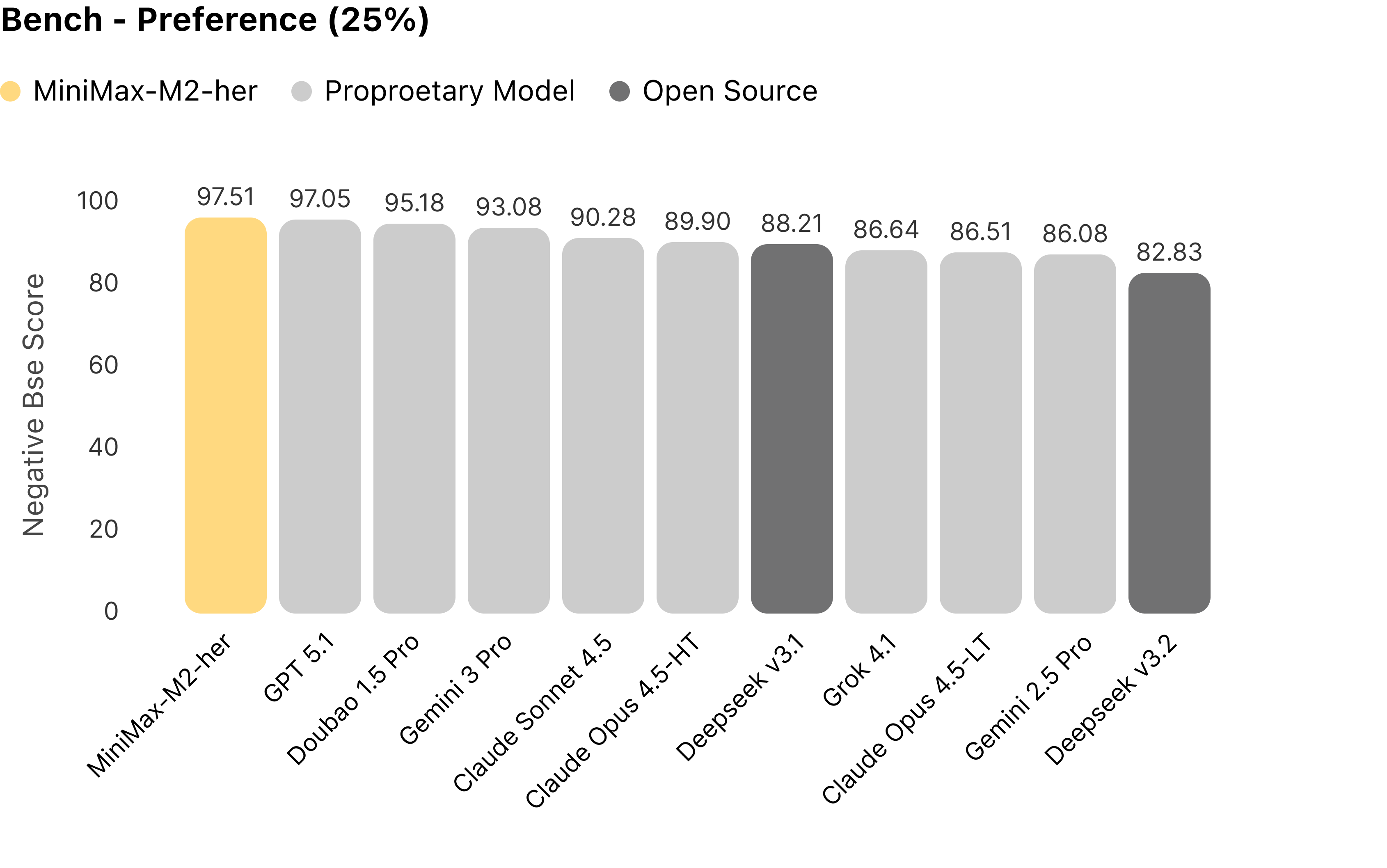

On the Stories dimension, MiniMax-M2-her ranks fifth among all models, still achieving a relatively high standard. Gemini excels in rich vocabulary, Claude steadily advances the plot, and Doubao delivers vivid expressions. In comparison, MiniMax-M2-her tends to maintain expressive diversity while adopting a plain and natural language style.

On the User Preferences dimension, MiniMax-M2-her excels. It avoids speaking for users while emphasizing responsive intent recognition and natural interaction.

Figure 2: Comparison of models' performance on the Worlds leaderboard

Figure 3: Comparison of models' performance on the Stories leaderboard

Figure 4: Comparison of models' performance on the User Preferences leaderboard

2.3.2 Long-Horizon Quality Degradation Analysis

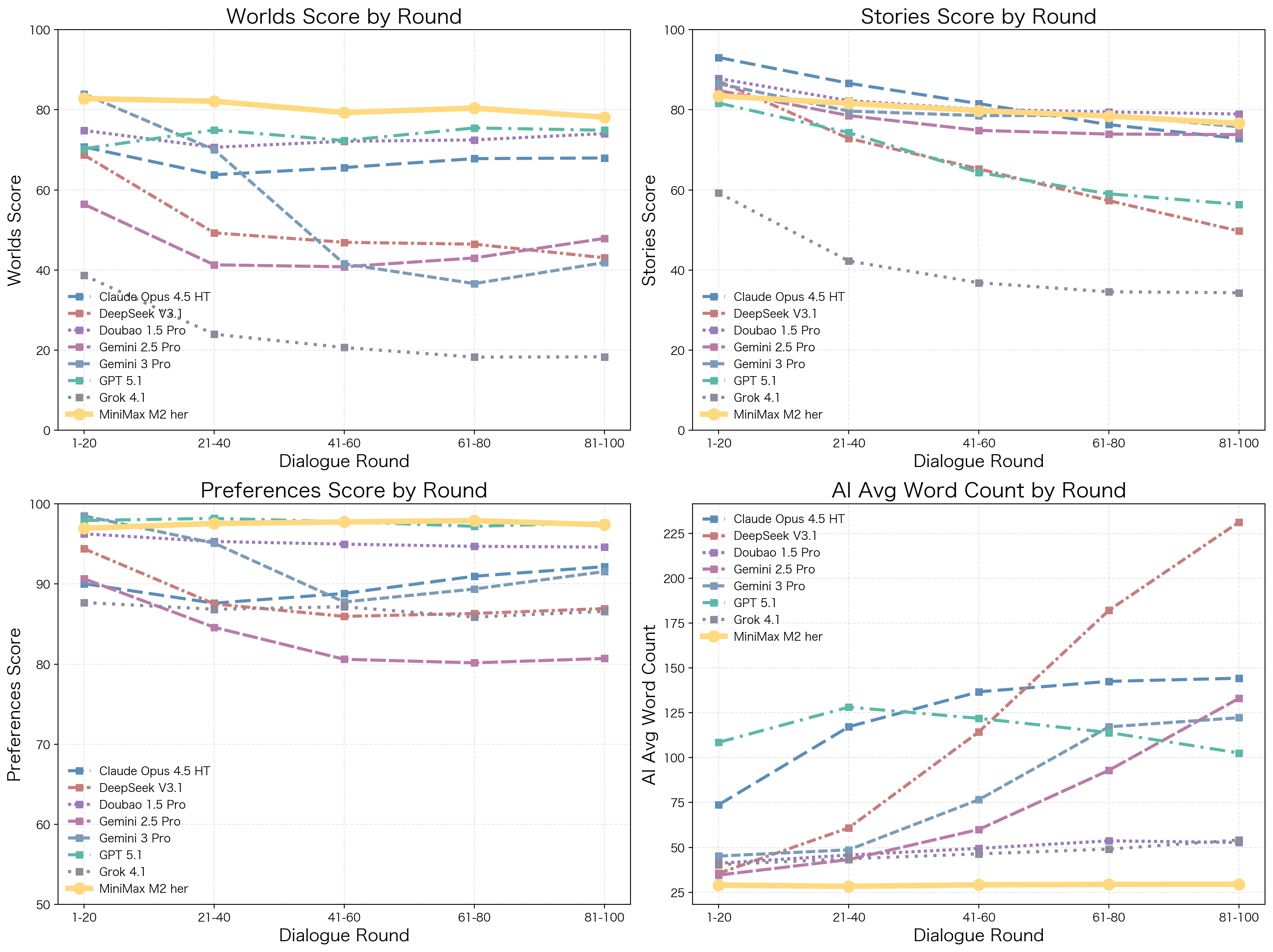

In role-play scenarios, users often prefer deep, immersive experiences. True immersion isn't built in five turns; it's built in fifty or more. This requires models to have long-range consistency and stable output capability, continuously maintaining character, relationship, and plot coherence. We further analyzed misalignment dimensions across different turn counts. We found MiniMax-M2-her better maintains long-conversation stability:

- Long-range quality stability: Most models hit a "performance wall" after turn 20—basic writing quality degrades, and plot momentum drops precipitously. Critically, these models often suffer from "context bloat," progressively generating longer and longer responses to compensate for lost focus. These bloated outputs inevitably contain more logic gaps, creating a compounding cycle of negative experiences as the conversation deepens.

- Response length controllability: Online users have a "cognitive comfort zone" for information reception—excessive text length creates a barrier to interaction. Addressing the "uncontrolled length inflation" common in most models, MiniMax-M2-her has been specifically optimized for brevity. Even in 100-turn conversations, it maintains response length within the optimal range, rejecting verbosity while ensuring high information density and content diversity.

Figure 5: Evolution of quality and response length across conversation turns by model
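A simple way to observe length inflation over a session is to average NPC response length per block of turns. The sketch below is only illustrative: it measures length in characters, uses a bucket size of 20 turns, and the function name is hypothetical.

```python
def mean_length_by_bucket(responses, bucket_size=20):
    """Average response length (in characters) for each block of turns."""
    means = []
    for start in range(0, len(responses), bucket_size):
        window = responses[start:start + bucket_size]
        means.append(sum(len(r) for r in window) / len(window))
    return means
```

A sequence of bucket means that keeps rising across a 100-turn session indicates the uncontrolled length growth described above, while a flat sequence indicates stable response length.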

3 How We Built MiniMax-M2-her

This section describes how we built MiniMax-M2-her. We propose a two-phase alignment strategy. First, we leverage Agentic Data Synthesis to broaden the variety of training data and mitigate misalignment. This establishes a robust baseline for the model's worldview understanding and narrative progression. Second, we use Online Preference Learning to integrate feedback from data specialists and align the model with user preferences. This allows the model to enhance personalization significantly without compromising the fundamental capabilities established in the initial phase.

3.1 Agentic Data Synthesis

To achieve comprehensive coverage across infinite user scenarios while maintaining narrative dynamism, we proposed Agentic Data Synthesis—a dialogue synthesis pipeline driven by a sophisticated agentic workflow. This pipeline is designed to optimize two orthogonal yet equally critical dimensions:

1. Quality. We define quality as adherence to rigorous standards spanning from linguistic fundamentals (grammar, syntax, lexical precision, and coreference resolution) to high-level narrative execution (world knowledge, contextual coherence, plot causality, worldview consistency, and atmospheric nuance).

2. Diversity. Diversity reflects the pipeline's capacity to span a vast manifold of interactions, capturing how distinct user personas engage with heterogeneous characters across varying worldviews, scenarios, and interaction styles.

3.1.1 Synthesis Pipeline Overview

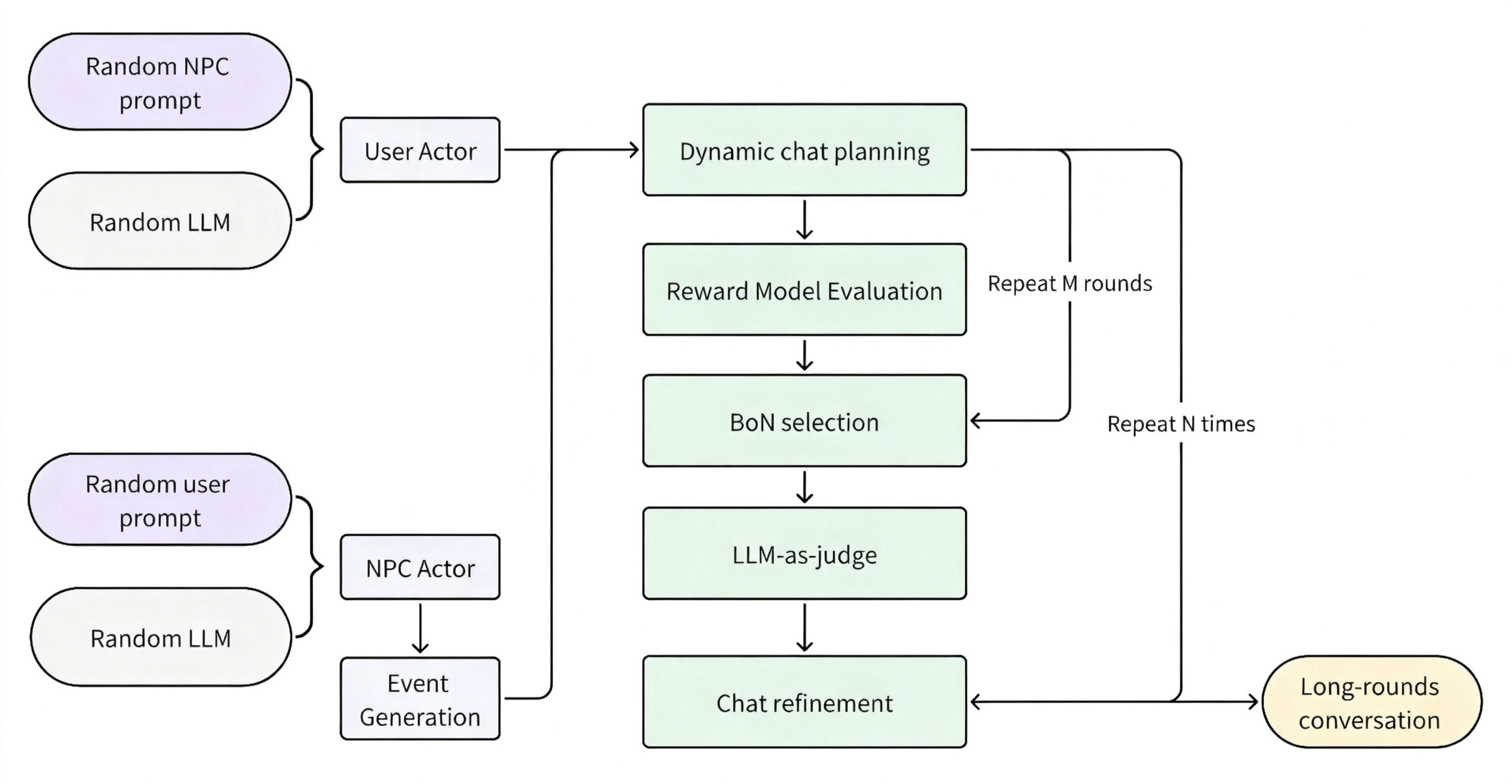

The overall synthetic data pipeline is illustrated in Figure 6. The workflow proceeds as follows:

- We randomly sample from our NPC/User Prompts library in Section 2.1 and instantiate two internal expert models from our diverse model pool.

- The selected expert models act as NPC and User to generate multiple candidate chat turns based on the current synthesis state. Instead of blind generation, we employ a Dynamic Chat Planning Module. This planner inserts control prompts into the context, guiding the conversation's direction and emotional tone.

- Each candidate turn is then evaluated by a multi-attribute reward model. We apply Best-of-N (BoN) sampling to filter out low-quality outputs, selecting the trajectory that maximizes narrative engagement.

- Every M turns, an LLM-as-a-judge agent reviews the recent segment. It acts as a "script doctor," rewriting the segment to correct contextual drift, sharpen plot progression, and enforce character consistency before appending it to the history. This ensures that errors do not compound over time.

- Finally, the rewritten segments are appended to existing conversation history, becoming the initial state for the next synthesis round.

Figure 6: Synthetic data pipeline overview
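The stages above can be outlined in code as follows. This is a structural sketch only: every component (`npc`, `user`, `planner`, `reward`, `rewrite`) is a hypothetical callable standing in for an expert model, the planning module, the multi-attribute reward model, and the LLM-as-a-judge rewriter respectively, and strict User/NPC alternation is assumed for simplicity.

```python
from typing import List, Tuple

Turn = Tuple[str, str]  # (speaker, text)


def synthesize(npc, user, planner, reward, rewrite,
               num_turns, n_candidates=4, rewrite_every=4):
    """Best-of-N generation with periodic segment rewriting.

    Assumed interfaces (all hypothetical):
      npc/user(context, control) -> str   candidate reply
      planner(context) -> str             control prompt for the next turn
      reward(context, candidate) -> float higher is better
      rewrite(history, segment) -> list   corrected segment of turns
    """
    history: List[Turn] = []   # reviewed turns
    segment: List[Turn] = []   # turns waiting for review
    for t in range(num_turns):
        speaker, model = ("user", user) if t % 2 == 0 else ("npc", npc)
        context = history + segment
        control = planner(context)
        candidates = [model(context, control) for _ in range(n_candidates)]
        # Best-of-N: keep the candidate the reward model scores highest.
        best = max(candidates, key=lambda c: reward(context, c))
        segment.append((speaker, best))
        if len(segment) == rewrite_every:
            # Every M turns the segment is reviewed and rewritten
            # before it becomes part of the history.
            history.extend(rewrite(history, segment))
            segment = []
    if segment:  # review any leftover turns at the end
        history.extend(rewrite(history, segment))
    return history
```

Rewriting a segment before appending it means later turns are always conditioned on corrected history, which is how errors are kept from compounding.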

3.1.2 Diversity Guarantees

During synthesis, we improve generation diversity at multiple nodes of the pipeline to prevent mode collapse and ensure broad distribution:

- Scenario diversity: Popular scenarios often cluster around tropes. If we sample NPC/User Prompts by natural distribution, model response style would become biased. For instance, we noticed an overrepresentation of "Domineering CEOs" in our seed data during a certain period. If left unchecked, the model would bias towards aggressive personalities. To counter this, we built a dispersion sampling method based on identity, attitude, events, personality, and relationship dynamics, using uniform sampling to ensure comprehensive coverage while neutralizing style bias.

- Prompt diversity: Some raw NPC Prompts are often skeletal. We enrich them by injecting detailed worldview positioning, conversation format specifications, multi-stage plot development, and "stagnation breakers." This transforms the NPC from a static portrait into a dynamic actor who knows how to advance the plot. Similarly, we expand User Prompts with specific psychographic information, e.g., user play styles (proactive/passive), plot preferences (fast-paced/slow-burn), player mentalities (immersive/commentary), and corresponding behavioral guidelines. This prevents the User-agent from being a passive mirror; it becomes an active participant with its own agenda.

- Style diversity: The diversity of the output is bounded by the diversity of the generators. We maintain a pool of expert models finetuned on distinct stylistic corpora. By pairing different expert models against each other (e.g., a "Verbose/Literary" User vs. a "Concise/Casual" NPC), we naturally produce varied stylistic collisions.

- Structural diversity: Traditional dialogues often force a rigid User → NPC ping-pong structure. We introduce a dynamic turn allocation mechanism that probabilistically enters a continuous-speech mode, allowing the User or NPC to speak for multiple consecutive turns. This mirrors real conversation rhythms, enabling emotional monologues, plot exposition, User-side follow-up questions, and supplementary explanations.
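As an illustration of the dynamic turn allocation idea, the sketch below decides who speaks next, letting the current speaker continue with some probability. The probability `p_continue`, the cap `max_run`, and the function name are assumptions chosen for the example, not reported values.

```python
import random


def allocate_speakers(num_turns, p_continue=0.2, max_run=3, seed=0):
    """Return a speaker sequence that occasionally breaks strict alternation.

    With probability `p_continue` the current speaker keeps the floor,
    up to `max_run` consecutive turns; otherwise the other side speaks.
    """
    if num_turns <= 0:
        return []
    rng = random.Random(seed)
    speakers = ["user"]
    run = 1  # length of the current speaker's streak
    while len(speakers) < num_turns:
        last = speakers[-1]
        if run < max_run and rng.random() < p_continue:
            speakers.append(last)
            run += 1
        else:
            speakers.append("npc" if last == "user" else "user")
            run = 1
    return speakers
```

Setting `p_continue=0.0` recovers the rigid User → NPC ping-pong structure, which makes the effect of the mechanism easy to compare.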

3.1.3 Quality Guarantees

Beyond the BoN sampling strategy, we employ two specialized agents to further improve basic conversation quality and maintain ultra-long-turn conversation consistency:

- Segment Checking and Refinement: This module periodically scans for surface-level errors (language mixing, character encoding errors), deep logic failures (physical contradictions, persona confusion), repetition (high overlap with the last 1–3 sentences), and formatting issues (misused quotes, ellipsis overuse). When issues are detected, it rewrites the problematic chunks while preserving narrative continuity.

- User-side Planning Agent: Even SOTA models tend to be repetitive or lose narrative direction in long conversations. Common issues include plots falling into boring daily-life loops, a lack of conflict and turning points that reduces appeal, and topics going in circles without progress. Therefore, we introduce a User-side planning agent to review and guide conversations. The planning agent's core responsibilities are to: assess the current conversation state (progressing smoothly, slightly stagnant, or falling into repetition) and judge whether a new plot element is needed; if so, select material from pre-generated character experiences inferred from causal chains, retrieving relevant "inciting incidents" or "flashbacks"; and finally, provide specific but flexible directions so that the story maintains a distinct vector of progress.
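The repetition check ("high overlap with the last 1–3 sentences") could be approximated with a word-overlap heuristic like the one below. This is a simplified stand-in and not the actual checker: it assumes English-style sentence punctuation, uses Jaccard similarity over lowercase word sets, and the 0.8 threshold is an arbitrary illustrative value.

```python
import re


def sentences(text):
    """Naive sentence split on ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def token_set(sentence):
    """Lowercased set of word tokens in a sentence."""
    return set(re.findall(r"\w+", sentence.lower()))


def is_repetitive(reply, previous_text, lookback=3, threshold=0.8):
    """True if any sentence in `reply` nearly duplicates one of the last
    `lookback` sentences of `previous_text` (Jaccard overlap >= threshold)."""
    recent = [token_set(s) for s in sentences(previous_text)[-lookback:]]
    for new in sentences(reply):
        new_tokens = token_set(new)
        if not new_tokens:
            continue
        for old_tokens in recent:
            union = new_tokens | old_tokens
            if len(new_tokens & old_tokens) / len(union) >= threshold:
                return True
    return False
```

A flagged reply would then be handed to the rewriting step; semantic repetition with different wording needs a model-based check and is not caught by this heuristic.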

3.2 Online Preference Learning

In Role-Play, users rarely draft explicit feature requests like "I prefer slow-burn emotional arcs." Instead, they vote with their behavior: frantically hitting "regenerate" during a rushed action scene, or lingering for minutes on a single poignant reply. These implicit behavioral signals, i.e., contextualized preferences, are key to better role-play performance. To capture them, we utilize Online Reinforcement Learning from Human Feedback (RLHF) to train MiniMax-M2-her to perceive and adapt to these latent preference vectors in real time.

3.2.1 Online Preference Learning Overview

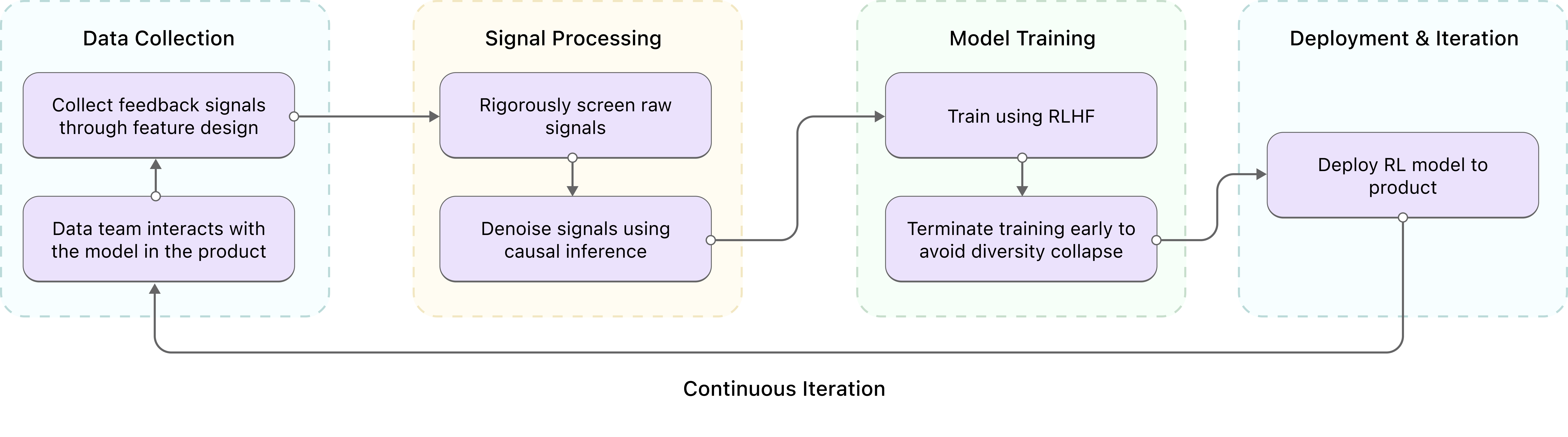

The entire Online Preference Learning process works as follows:

- We gather signals from a dedicated large-scale annotation team that engages with the deployed models directly within the product environment, acting as proxy users.

- Since raw signals are notoriously noisy, we do not use them directly. Instead, we apply a rigorous Signal Filtering and Causal Denoising pipeline to isolate genuine quality signals from statistical noise.

- We then train the model on the refined signals using RLHF. We apply early stopping to prevent models from "reward hacking", which commonly causes the collapse of generation diversity.

- We finally redeploy the trained RL model to the product and continuously iterate with the annotation team, progressively improving contextualized preference alignment under dynamic populations.

3.2.2 Signal Extraction and Causal Denoising

The effectiveness of online preference learning hinges on whether collected signals reliably indicate generation quality.

One of our past observations is that raw feedback data is extremely noisy. If we train models directly on raw rewards—whether signals are implicit (turns, session duration) or explicit (likes/dislikes)—models tend to overfit to extreme, low-quality patterns (like controversial or "clickbait" content) or regress to the population mean, resulting in catastrophic mode collapse.

To solve this, we developed a Causal Denoising Protocol:

- Stratified Bias Removal: We first categorize our annotators based on activity level, interaction style, and temporal factors (time-of-day/cyclical effects). By stratifying the data, we neutralize systematic biases introduced by specific user groups or habits.

- Causal Inference: We perform causal analysis on both explicit signals (Regenerate, Like/Dislike) and implicit signals (Session Duration, Turn Count) to isolate "Main Effects" from "Interaction Effects", identifying which factors are useful for improving user-related metrics. Our analysis revealed that Session Duration is a high-fidelity predictor of satisfaction, whereas simple Turn Count is a weaker, noisier signal. We then apply joint stratified sampling, outlier filtering, and causal adjustment to obtain a denoised dataset with sufficient diversity that aligns with real user preferences.

- The "Quality Floor" Filter: A signal might indicate high user preference but low objective quality (e.g., a user engaging with broken but funny text). To prevent degradation, we add a final quality-checking layer: we discard signals that fail our baseline quality benchmarks. This ensures the model captures personalization preferences without lowering its capability ceiling.
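Two of the steps above, stratified bias removal and the quality floor, can be illustrated with a small sketch. It is a deliberate simplification: within-stratum standardization of session duration stands in for the full causal adjustment, and the field names (`stratum`, `duration`, `quality`) and thresholds are assumptions made for the example.

```python
from collections import defaultdict
from statistics import mean, pstdev


def denoise(signals, quality_floor=0.5, z_clip=3.0):
    """Standardize durations within each stratum, drop outliers and
    low-quality samples, and attach the z-score as the reward.

    Each signal is a dict with hypothetical keys:
      "stratum"  - annotator group (e.g. activity level x time of day)
      "duration" - session duration, the implicit preference signal
      "quality"  - score from a separate baseline quality check
    """
    by_stratum = defaultdict(list)
    for s in signals:
        by_stratum[s["stratum"]].append(s["duration"])
    stats = {k: (mean(v), pstdev(v)) for k, v in by_stratum.items()}

    kept = []
    for s in signals:
        mu, sigma = stats[s["stratum"]]
        z = 0.0 if sigma == 0 else (s["duration"] - mu) / sigma
        if abs(z) > z_clip:
            continue  # outlier within its own stratum
        if s["quality"] < quality_floor:
            continue  # preferred by the user but below the quality floor
        kept.append({**s, "reward": z})
    return kept
```

Standardizing within strata removes the baseline differences between groups, so a long session from a habitually long-session group no longer counts as a stronger signal than it is.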

3.2.3 Model Training

With a clean, denoised dataset, we train models using RLHF. Here, the primary risk is Entropy Degradation: the tendency of the model to lose its diversity and converge on a few "safe" patterns. To combat this, we continuously monitor the entropy of the output distribution and apply early stopping as soon as we detect a significant drop in diversity. Notably, our experiments show that in Role-Play scenarios, RLHF tends to overfit rapidly, often by the second epoch.
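An entropy-based stopping rule could be sketched as below. Note the assumptions: unigram entropy over whitespace-split sampled text is used as a cheap proxy for the entropy of the model's output distribution, and the 10% relative-drop threshold is an illustrative value rather than a reported one.

```python
import math
from collections import Counter


def token_entropy(samples):
    """Shannon entropy (in nats) of the unigram distribution over samples."""
    counts = Counter(tok for text in samples for tok in text.split())
    total = sum(counts.values())
    return -sum((c / total) * math.log(c / total) for c in counts.values())


def should_stop(entropy_history, max_relative_drop=0.1):
    """Stop when entropy falls more than `max_relative_drop` below the
    value measured at the first checkpoint."""
    if len(entropy_history) < 2:
        return False
    baseline = entropy_history[0]
    if baseline <= 0:
        return False
    return (baseline - entropy_history[-1]) / baseline > max_relative_drop
```

In use, a fixed set of evaluation prompts would be sampled at each checkpoint, `token_entropy` appended to the history, and training halted the first time `should_stop` returns true.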

We redeploy trained models to the product, letting the data team interact for the next round, using better models to collect higher-quality user feedback, thereby training superior next-generation models. This iterative loop progressively elevates model quality.

4 What’s Next?

If the past three years were defined by the question "How do we make an agent play a character well?", the next era is defined by a far more ambitious challenge: "How do we let users truly own a world, one they can explore, shape, and watch evolve?"

We call this direction Worldplay. It represents a fundamental shift in the user's role: upgrading them from "entering a pre-set world" to "co-creating the world."

In our Worlds × Stories × User Preferences framework, the Worlds dimension today focuses on following settings and staying logically consistent, ensuring the model adheres to the context without breaking immersion. But once reliable adherence is achieved, the next frontier opens: How do events change future plots? How do user choices alter character destinies? How do we track those changes over hundreds or thousands of turns?

This drives an evolution from static prompt injection to Dynamic World State modeling: structuring entities, relationships, and causal chains so the model can track what happened, what changed, and what might happen next—at 100-turn and 1000-turn scales. For deep users, this is the threshold of true immersion: like an open-world game, they demand hidden variables, emergent consequences, and even branching worldlines.

Another critical axis of Worldplay is Multi-character Coordination. While today’s role-play is mostly 1v1 bonding, the most compelling narratives are ensemble dramas: users navigate relationships with multiple characters, characters have their own evolving bonds, jealousy and alliances emerge—"living" even when the user is absent. This is an upgrade of the "structural diversity" we discussed in Section 3: the challenge is no longer just "who speaks next," but "how multiple agents share world state, coordinate narrative, and maintain independent personas." In such settings, the risk of Reference Confusion explodes combinatorially.

Ultimately, our goal is simple to articulate but incredibly hard to build: To give you a world you can define, stories that grow with you, and companions that understand you without usurping your agency.

In Worldplay, users aren’t just participants—they’re World Definers: designing factions, planting foreshadowing flags, and pruning the branches of their own reality. This demands stronger planning capabilities—specifically, the intelligence to recognize the flags you plant and ensure they pay off at the perfect dramatic moment, alongside robust consistency guarantees to keep the world state stable across hundreds of turns.

The Planning Agent design in Section 3 is merely the foundation—it proves that models can proactively assess dialogue state and introduce new elements. The next step is a planning layer that tracks world changes across characters and vast time scales, ensuring the world truly comes alive.

We still have a long road to travel before full Worldplay, but the direction is clear.

Worlds to Dream, Stories to Live. Let's go together.